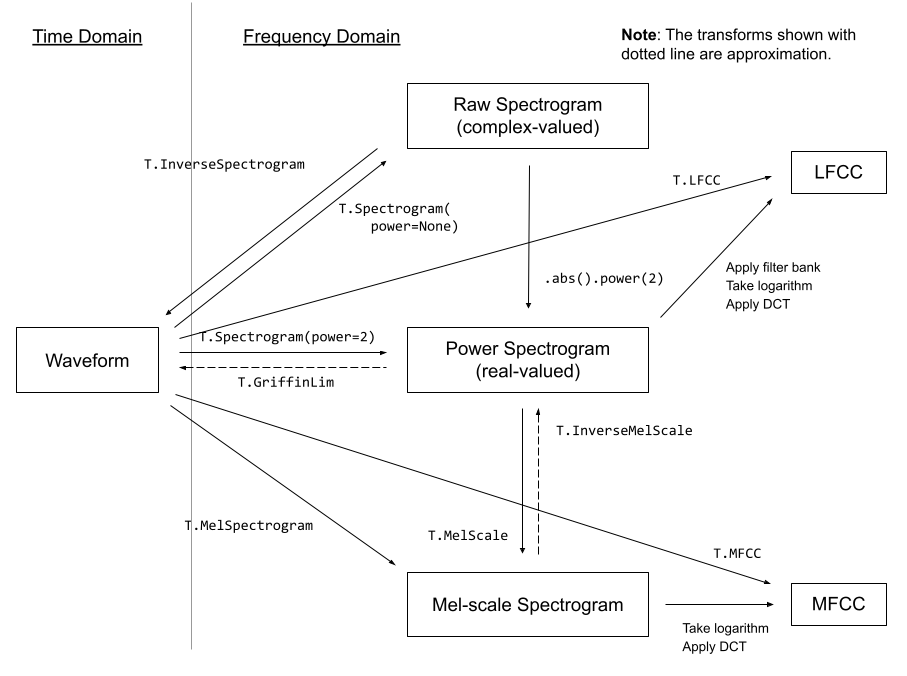

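Audio Feature Extraction

torchaudio implements feature extractions commonly used in the audio domain. They are available in torchaudio.functional and torchaudio.transforms.

functional implements features as standalone functions. They are stateless.

transforms implements features as objects, using implementations from functional and torch.nn.Module. They can be serialized using TorchScript.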
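The split between stateless functions and configured objects can be pictured with a plain-Python analogy. This is only a sketch of the design pattern: the names `power_to_db` and `PowerToDB` are hypothetical stand-ins invented for illustration and are not torchaudio APIs.

```python
import math


def power_to_db(power, floor=1e-10):
    """Stateless form: every setting arrives as an argument on each call."""
    return 10.0 * math.log10(max(power, floor))


class PowerToDB:
    """Object form: settings are stored once and reused on every call."""

    def __init__(self, floor=1e-10):
        self.floor = floor

    def __call__(self, power):
        # Delegate to the stateless function, as a transform object would.
        return power_to_db(power, floor=self.floor)


to_db = PowerToDB(floor=1e-10)
print(power_to_db(100.0))  # functional style
print(to_db(100.0))        # transform style, same result
```

The object form is convenient when the same configuration is applied to many inputs, while the function form keeps every parameter visible at the call site.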

import torch

import torchaudio

import torchaudio.functional as F

import torchaudio.transforms as T

print(torch.__version__)

print(torchaudio.__version__)

Out:

1.12.0

0.12.0

Preparation

Note: When running this tutorial in Google Colab, install the required packages:

!pip install librosa

from IPython.display import Audio

import librosa

import matplotlib.pyplot as plt

from torchaudio.utils import download_asset

torch.random.manual_seed(0)

SAMPLE_SPEECH = download_asset("tutorial-assets/Lab41-SRI-VOiCES-src-sp0307-ch127535-sg0042.wav")

def plot_waveform(waveform, sr, title="Waveform"):
    waveform = waveform.numpy()

    num_channels, num_frames = waveform.shape
    time_axis = torch.arange(0, num_frames) / sr

    figure, axes = plt.subplots(num_channels, 1)
    axes.plot(time_axis, waveform[0], linewidth=1)
    axes.grid(True)
    figure.suptitle(title)
    plt.show(block=False)

def plot_spectrogram(specgram, title=None, ylabel="freq_bin"):
    fig, axs = plt.subplots(1, 1)
    axs.set_title(title or "Spectrogram (db)")
    axs.set_ylabel(ylabel)
    axs.set_xlabel("frame")
    im = axs.imshow(librosa.power_to_db(specgram), origin="lower", aspect="auto")
    fig.colorbar(im, ax=axs)
    plt.show(block=False)

def plot_fbank(fbank, title=None):
    fig, axs = plt.subplots(1, 1)
    axs.set_title(title or "Filter bank")
    axs.imshow(fbank, aspect="auto")
    axs.set_ylabel("frequency bin")
    axs.set_xlabel("mel bin")
    plt.show(block=False)

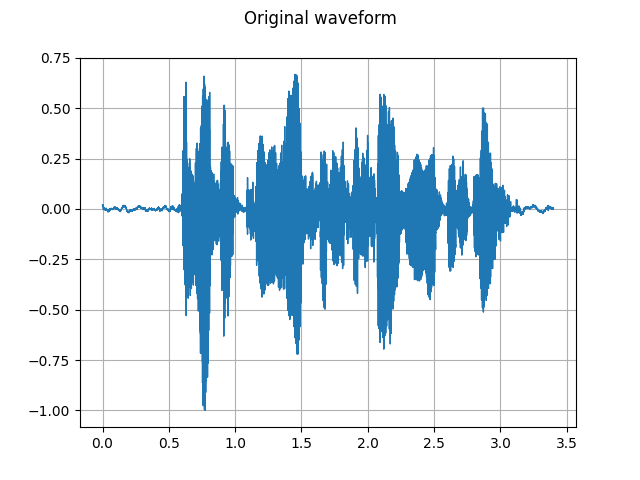

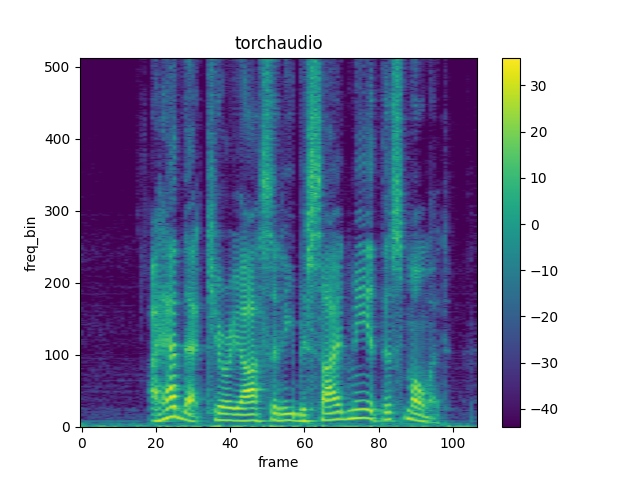

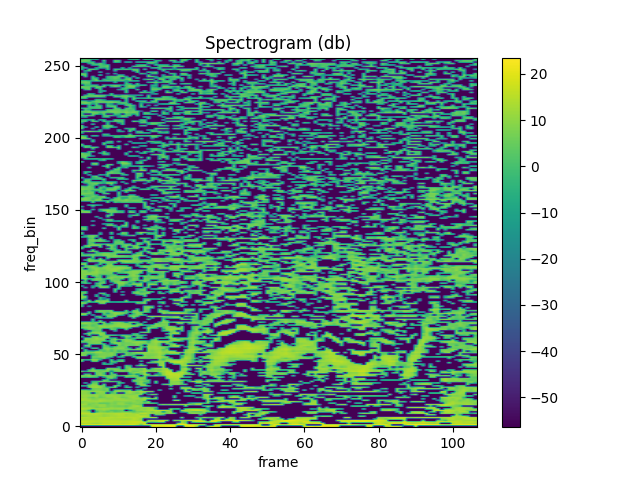

Spectrogram

To get the frequency make-up of an audio signal as it varies with time, you can use torchaudio.transforms.Spectrogram().

SPEECH_WAVEFORM, SAMPLE_RATE = torchaudio.load(SAMPLE_SPEECH)

plot_waveform(SPEECH_WAVEFORM, SAMPLE_RATE, title="Original waveform")

Audio(SPEECH_WAVEFORM.numpy(), rate=SAMPLE_RATE)

n_fft = 1024

win_length = None

hop_length = 512

# Define transform

spectrogram = T.Spectrogram(
    n_fft=n_fft,
    win_length=win_length,
    hop_length=hop_length,
    center=True,
    pad_mode="reflect",
    power=2.0,
)

# Perform transform

spec = spectrogram(SPEECH_WAVEFORM)

plot_spectrogram(spec[0], title="torchaudio")

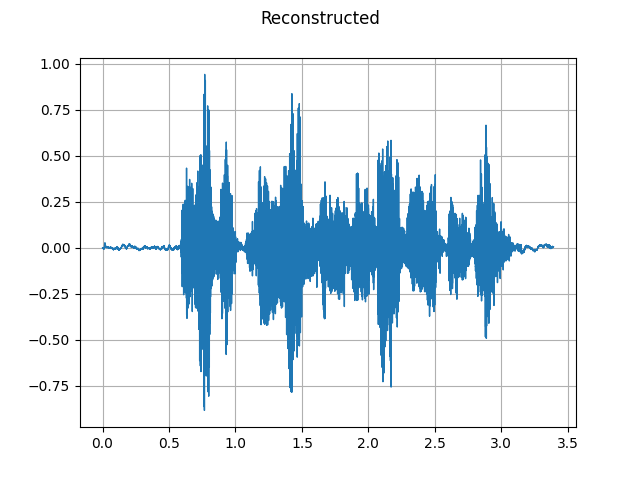
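To make the shape of the resulting spectrogram concrete, the sketch below works out the number of frequency bins and frames from the transform parameters. It assumes a one-sided spectrum and center=True (as in the call above), and that win_length falls back to n_fft and hop_length to win_length // 2 when left as None. The helper name `spectrogram_shape` and the 16000-sample input length are hypothetical, chosen only for illustration.

```python
def spectrogram_shape(num_samples, n_fft, hop_length=None, win_length=None):
    """Return (n_freqs, n_frames) for a one-sided, centered STFT."""
    # Assumed defaults: window spans the whole FFT, hop is half a window.
    win_length = n_fft if win_length is None else win_length
    hop_length = win_length // 2 if hop_length is None else hop_length

    # A one-sided spectrum keeps the bins from 0 Hz up to Nyquist.
    n_freqs = n_fft // 2 + 1
    # With centering, the signal is padded by n_fft // 2 on both sides,
    # so one frame is centered on every hop_length-th sample.
    n_frames = 1 + num_samples // hop_length
    return n_freqs, n_frames


# Hypothetical input: 16000 samples, same n_fft and hop_length as above.
n_freqs, n_frames = spectrogram_shape(num_samples=16000, n_fft=1024, hop_length=512)
print(n_freqs, n_frames)
```

The first value is the height of the plotted spectrogram (the freq_bin axis) and the second is its width (the frame axis).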

GriffinLim

To recover a waveform from a spectrogram, you can use GriffinLim.

torch.random.manual_seed(0)

n_fft = 1024

win_length = None

hop_length = 512

spec = T.Spectrogram(
    n_fft=n_fft,
    win_length=win_length,
    hop_length=hop_length,
)(SPEECH_WAVEFORM)

griffin_lim = T.GriffinLim(
    n_fft=n_fft,
    win_length=win_length,
    hop_length=hop_length,
)

reconstructed_waveform = griffin_lim(spec)

plot_waveform(reconstructed_waveform, SAMPLE_RATE, title="Reconstructed")

Audio(reconstructed_waveform, rate=SAMPLE_RATE)

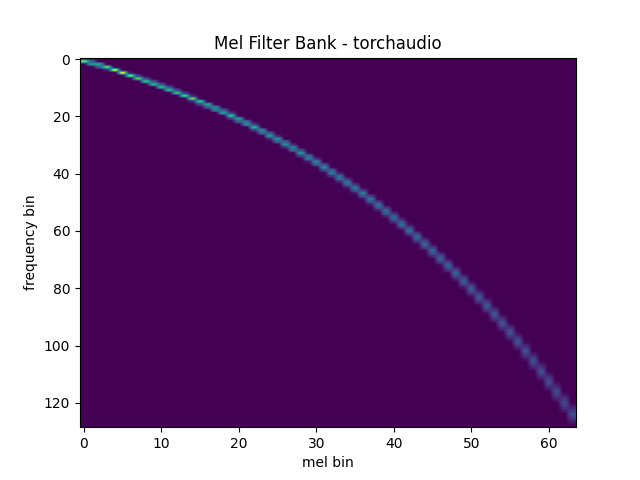

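Mel Filter Bank

torchaudio.functional.melscale_fbanks() generates the filter bank for converting frequency bins to mel-scale bins.

Since this function does not require input audio/features, there is no equivalent transform in torchaudio.transforms.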

n_fft = 256

n_mels = 64

sample_rate = 6000

mel_filters = F.melscale_fbanks(
    int(n_fft // 2 + 1),
    n_mels=n_mels,
    f_min=0.0,
    f_max=sample_rate / 2.0,
    sample_rate=sample_rate,
    norm="slaney",
)

plot_fbank(mel_filters, "Mel Filter Bank - torchaudio")

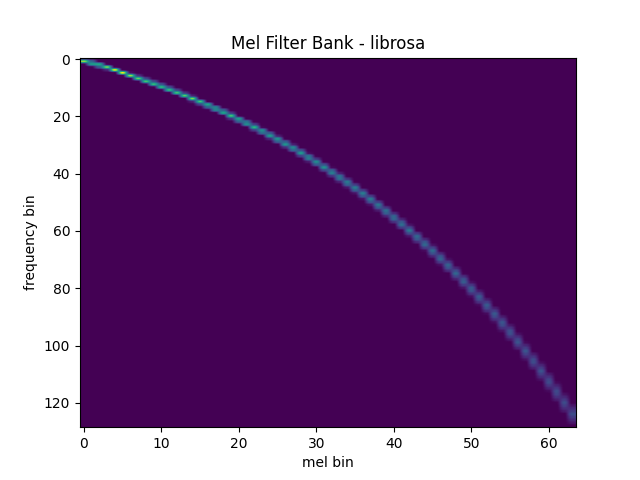
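As a rough illustration of what such a filter bank contains, the following pure-Python sketch builds triangular filters spaced evenly on the HTK mel scale. It is a simplified sketch under stated assumptions, not torchaudio's implementation: the helper names are hypothetical, the HTK formula mel = 2595 * log10(1 + f / 700) is assumed, and the "slaney" area normalization used above is left out.

```python
import math


def hz_to_mel_htk(freq_hz):
    """HTK-style conversion from Hz to mel."""
    return 2595.0 * math.log10(1.0 + freq_hz / 700.0)


def mel_to_hz_htk(mel):
    """Inverse of hz_to_mel_htk."""
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)


def linspace(start, stop, num):
    """Evenly spaced values from start to stop, both included."""
    step = (stop - start) / (num - 1)
    return [start + i * step for i in range(num)]


def triangular_mel_fbank(n_freqs, n_mels, f_min, f_max, sample_rate):
    """Return an n_freqs x n_mels nested list of triangular filter weights."""
    # Center frequency of every linear-frequency bin, 0 Hz to Nyquist.
    all_freqs = linspace(0.0, sample_rate / 2.0, n_freqs)
    # n_mels + 2 edge points, equally spaced in mel, mapped back to Hz.
    mel_points = linspace(hz_to_mel_htk(f_min), hz_to_mel_htk(f_max), n_mels + 2)
    hz_points = [mel_to_hz_htk(m) for m in mel_points]

    fbank = [[0.0] * n_mels for _ in range(n_freqs)]
    for i, freq in enumerate(all_freqs):
        for j in range(n_mels):
            left, center, right = hz_points[j], hz_points[j + 1], hz_points[j + 2]
            rising = (freq - left) / (center - left)
            falling = (right - freq) / (right - center)
            # Triangle: rises from left to center, falls from center to right.
            fbank[i][j] = max(0.0, min(rising, falling))
    return fbank


# Same sizes as the example above: n_fft = 256 gives 129 frequency bins.
fbank = triangular_mel_fbank(n_freqs=129, n_mels=64, f_min=0.0, f_max=3000.0, sample_rate=6000)
print(len(fbank), len(fbank[0]))
```

Each column is one mel filter. Because the edge points are equally spaced in mel rather than in Hz, the triangles get wider toward higher frequencies.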

Comparison against librosa

For reference, here is the equivalent way to get the mel filter bank with librosa.

mel_filters_librosa = librosa.filters.mel(
    sr=sample_rate,
    n_fft=n_fft,
    n_mels=n_mels,
    fmin=0.0,
    fmax=sample_rate / 2.0,
    norm="slaney",
    htk=True,
).T

plot_fbank(mel_filters_librosa, "Mel Filter Bank - librosa")

mse = torch.square(mel_filters - mel_filters_librosa).mean().item()

print("Mean Square Difference: ", mse)

Out:

Mean Square Difference: 3.84594449432978e-17

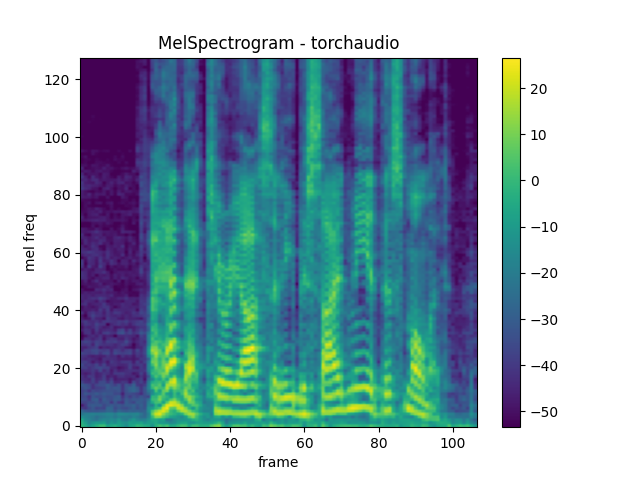

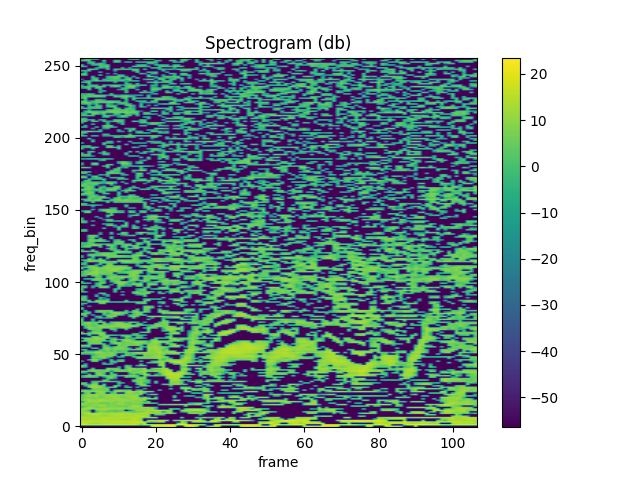

MelSpectrogram

Generating a mel-scale spectrogram involves generating a spectrogram and performing mel-scale conversion. In torchaudio, torchaudio.transforms.MelSpectrogram() provides this functionality.

n_fft = 1024

win_length = None

hop_length = 512

n_mels = 128

mel_spectrogram = T.MelSpectrogram(
    sample_rate=sample_rate,
    n_fft=n_fft,
    win_length=win_length,
    hop_length=hop_length,
    center=True,
    pad_mode="reflect",
    power=2.0,
    norm="slaney",
    onesided=True,
    n_mels=n_mels,
    mel_scale="htk",
)

melspec = mel_spectrogram(SPEECH_WAVEFORM)

plot_spectrogram(melspec[0], title="MelSpectrogram - torchaudio", ylabel="mel freq")

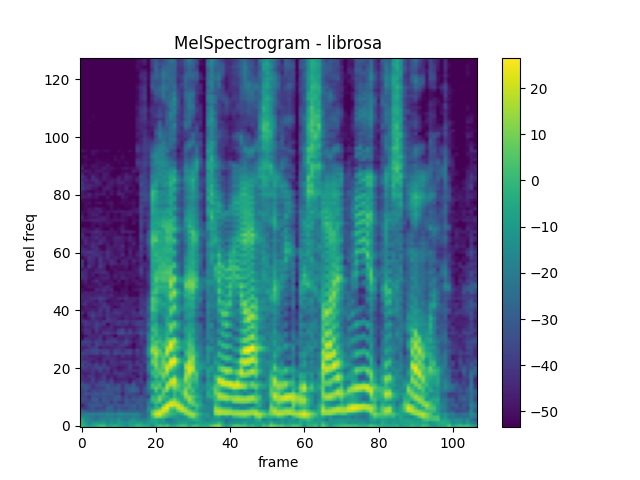
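The two stages described above can be sketched on toy data: once a power spectrogram and a filter bank exist, the mel-scale conversion amounts to a weighted sum over frequency bins for every frame. The tiny matrices and the helper name `apply_fbank` below are hypothetical and serve only to show the arithmetic; this is not how torchaudio is implemented internally.

```python
def apply_fbank(spec, fbank):
    """Project a (n_freqs x n_frames) spectrogram onto (n_freqs x n_mels) filters.

    Returns an (n_mels x n_frames) nested list.
    """
    n_freqs, n_frames = len(spec), len(spec[0])
    n_mels = len(fbank[0])
    mel_spec = [[0.0] * n_frames for _ in range(n_mels)]
    for m in range(n_mels):
        for t in range(n_frames):
            # Weighted sum of all frequency bins for mel bin m at frame t.
            mel_spec[m][t] = sum(fbank[f][m] * spec[f][t] for f in range(n_freqs))
    return mel_spec


# Toy power spectrogram: 3 frequency bins, 2 frames.
toy_spec = [
    [1.0, 2.0],
    [3.0, 4.0],
    [5.0, 6.0],
]
# Toy filter bank: 3 frequency bins mapped onto 2 mel bins.
toy_fbank = [
    [1.0, 0.0],
    [0.5, 0.5],
    [0.0, 1.0],
]

toy_mel = apply_fbank(toy_spec, toy_fbank)
print(toy_mel)
```

The middle frequency bin is shared between both mel bins, which mirrors how neighboring triangular filters overlap.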

Comparison against librosa

For reference, here is the equivalent means of generating mel-scale spectrograms with librosa.

melspec_librosa = librosa.feature.melspectrogram(
    y=SPEECH_WAVEFORM.numpy()[0],
    sr=sample_rate,
    n_fft=n_fft,
    hop_length=hop_length,
    win_length=win_length,
    center=True,
    pad_mode="reflect",
    power=2.0,
    n_mels=n_mels,
    norm="slaney",
    htk=True,
)

plot_spectrogram(melspec_librosa, title="MelSpectrogram - librosa", ylabel="mel freq")

mse = torch.square(melspec - melspec_librosa).mean().item()

print("Mean Square Difference: ", mse)

Out:

Mean Square Difference: 1.0186037568971074e-09

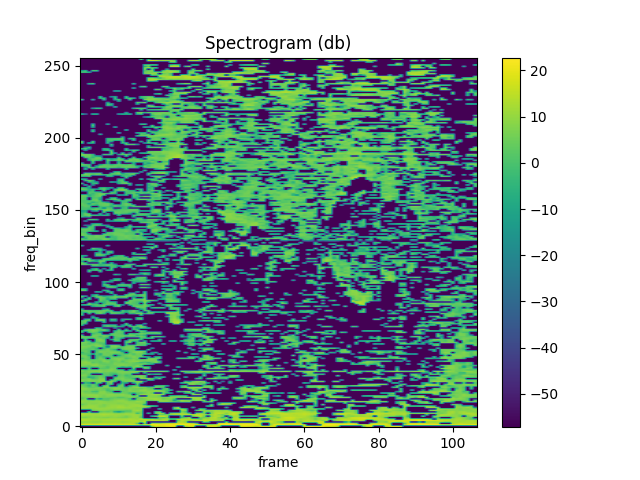

MFCC

n_fft = 2048

win_length = None

hop_length = 512

n_mels = 256

n_mfcc = 256

mfcc_transform = T.MFCC(
    sample_rate=sample_rate,
    n_mfcc=n_mfcc,
    melkwargs={
        "n_fft": n_fft,
        "n_mels": n_mels,
        "hop_length": hop_length,
        "mel_scale": "htk",
    },
)

mfcc = mfcc_transform(SPEECH_WAVEFORM)

plot_spectrogram(mfcc[0])
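Conceptually, MFCCs are obtained by taking a log-scaled mel spectrogram and applying a type-II discrete cosine transform along the mel axis of each frame. The sketch below shows an orthonormal DCT-II on a single frame in pure Python. The helper name `dct2_ortho` and the input values are hypothetical, and the code illustrates the idea rather than reproducing T.MFCC.

```python
import math


def dct2_ortho(values):
    """Orthonormal DCT-II of a single frame (one value per mel bin)."""
    n = len(values)
    coeffs = []
    for k in range(n):
        total = sum(
            v * math.cos(math.pi / n * (i + 0.5) * k) for i, v in enumerate(values)
        )
        # Orthonormal scaling: the k = 0 term uses sqrt(1/n), the rest sqrt(2/n).
        scale = math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)
        coeffs.append(scale * total)
    return coeffs


# Hypothetical dB-scaled mel energies for one frame (4 mel bins).
log_mel_frame = [0.0, -3.0, -6.0, -9.0]
mfcc_frame = dct2_ortho(log_mel_frame)
print(mfcc_frame)
```

Keeping only the first few coefficients of each frame yields a compact description of the spectral envelope, which is the role n_mfcc plays in the transform above.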

Comparison against librosa

melspec = librosa.feature.melspectrogram(
    y=SPEECH_WAVEFORM.numpy()[0],
    sr=sample_rate,
    n_fft=n_fft,
    win_length=win_length,
    hop_length=hop_length,
    n_mels=n_mels,
    htk=True,
    norm=None,
)

mfcc_librosa = librosa.feature.mfcc(
    S=librosa.core.spectrum.power_to_db(melspec),
    n_mfcc=n_mfcc,
    dct_type=2,
    norm="ortho",
)

plot_spectrogram(mfcc_librosa)

mse = torch.square(mfcc - mfcc_librosa).mean().item()

print("Mean Square Difference: ", mse)

Out:

Mean Square Difference: 0.8103954195976257

LFCC

n_fft = 2048

win_length = None

hop_length = 512

n_lfcc = 256

lfcc_transform = T.LFCC(
    sample_rate=sample_rate,
    n_lfcc=n_lfcc,
    speckwargs={
        "n_fft": n_fft,
        "win_length": win_length,
        "hop_length": hop_length,
    },
)

lfcc = lfcc_transform(SPEECH_WAVEFORM)

plot_spectrogram(lfcc[0])

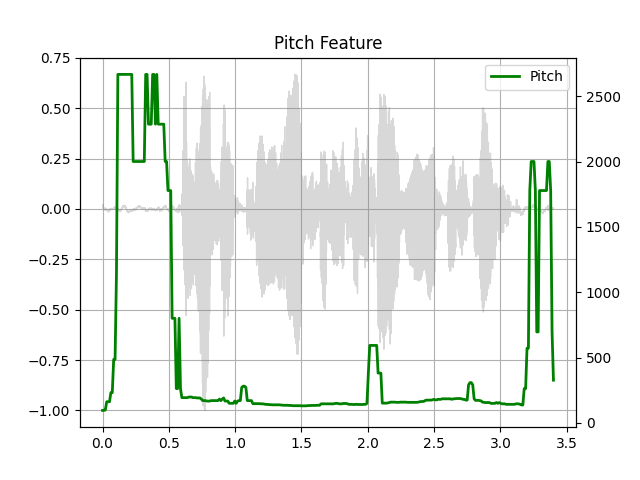

Pitch

pitch = F.detect_pitch_frequency(SPEECH_WAVEFORM, SAMPLE_RATE)

def plot_pitch(waveform, sr, pitch):
    figure, axis = plt.subplots(1, 1)
    axis.set_title("Pitch Feature")
    axis.grid(True)

    end_time = waveform.shape[1] / sr
    time_axis = torch.linspace(0, end_time, waveform.shape[1])
    axis.plot(time_axis, waveform[0], linewidth=1, color="gray", alpha=0.3)

    axis2 = axis.twinx()
    time_axis = torch.linspace(0, end_time, pitch.shape[1])
    axis2.plot(time_axis, pitch[0], linewidth=2, label="Pitch", color="green")

    axis2.legend(loc=0)
    plt.show(block=False)

plot_pitch(SPEECH_WAVEFORM, SAMPLE_RATE, pitch)

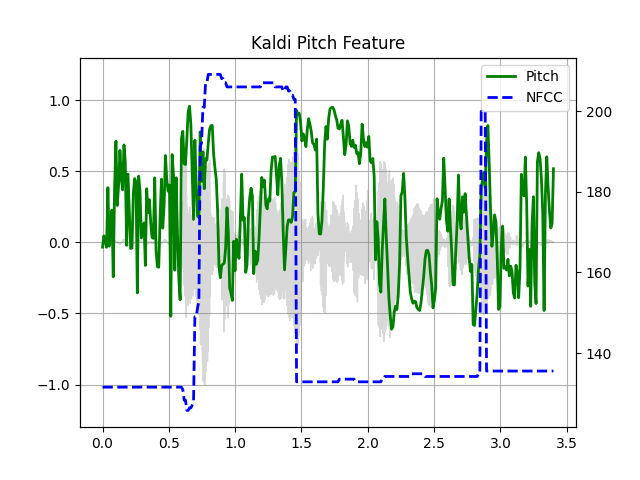
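For intuition about correlation-based pitch detection, the sketch below estimates the fundamental frequency of a synthetic tone by searching for the lag at which the signal best matches a shifted copy of itself. It is a minimal illustration, not the algorithm behind F.detect_pitch_frequency: the helper name, the 150 to 400 Hz search range, and the 200 Hz test tone are all hypothetical choices.

```python
import math


def estimate_pitch_autocorr(samples, sample_rate, freq_low, freq_high):
    """Return the frequency whose period maximizes the normalized correlation."""
    min_lag = int(sample_rate / freq_high)  # shortest period considered
    max_lag = int(sample_rate / freq_low)   # longest period considered
    best_lag, best_score = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        n = len(samples) - lag
        # Correlate the signal with a copy of itself shifted by `lag` samples.
        cross = sum(samples[i] * samples[i + lag] for i in range(n))
        energy_a = sum(samples[i] ** 2 for i in range(n))
        energy_b = sum(samples[i + lag] ** 2 for i in range(n))
        if energy_a > 0.0 and energy_b > 0.0:
            score = cross / math.sqrt(energy_a * energy_b)
        else:
            score = 0.0
        if score > best_score:
            best_lag, best_score = lag, score
    return sample_rate / best_lag


# Synthetic test tone: 0.1 s of a 200 Hz sine sampled at 8 kHz.
rate = 8000
tone = [math.sin(2.0 * math.pi * 200.0 * i / rate) for i in range(800)]
estimate = estimate_pitch_autocorr(tone, rate, freq_low=150.0, freq_high=400.0)
print(estimate)
```

The search range is kept narrower than one octave here so that only a single period of the tone fits inside it. Real detectors need extra logic to avoid locking onto multiples of the true period.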

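Kaldi Pitch (beta)

Kaldi Pitch feature [1] is a pitch detection mechanism tuned for automatic speech recognition (ASR) applications. This is a beta feature in torchaudio, and it is available as torchaudio.functional.compute_kaldi_pitch().

[1] A pitch extraction algorithm tuned for automatic speech recognition

P. Ghahremani, B. BabaAli, D. Povey, K. Riedhammer, J. Trmal and S. Khudanpur

2014 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Florence, 2014, pp. 2494-2498, doi: 10.1109/ICASSP.2014.6854049.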

pitch_feature = F.compute_kaldi_pitch(SPEECH_WAVEFORM, SAMPLE_RATE)

pitch, nfcc = pitch_feature[..., 0], pitch_feature[..., 1]

def plot_kaldi_pitch(waveform, sr, pitch, nfcc):
    _, axis = plt.subplots(1, 1)
    axis.set_title("Kaldi Pitch Feature")
    axis.grid(True)

    end_time = waveform.shape[1] / sr
    time_axis = torch.linspace(0, end_time, waveform.shape[1])
    axis.plot(time_axis, waveform[0], linewidth=1, color="gray", alpha=0.3)

    time_axis = torch.linspace(0, end_time, pitch.shape[1])
    ln1 = axis.plot(time_axis, pitch[0], linewidth=2, label="Pitch", color="green")
    axis.set_ylim((-1.3, 1.3))

    axis2 = axis.twinx()
    time_axis = torch.linspace(0, end_time, nfcc.shape[1])
    ln2 = axis2.plot(time_axis, nfcc[0], linewidth=2, label="NFCC", color="blue", linestyle="--")

    lns = ln1 + ln2
    labels = [l.get_label() for l in lns]
    axis.legend(lns, labels, loc=0)
    plt.show(block=False)

plot_kaldi_pitch(SPEECH_WAVEFORM, SAMPLE_RATE, pitch, nfcc)

Total running time of the script: (0 minutes 5.984 seconds)