注意

单击此处下载完整的示例代码

音频 I/O¶

torchaudio集成并提供丰富的音频 I/O。libsox

# When running this tutorial in Google Colab, install the required packages

# with the following.

# !pip install torchaudio boto3

import torch

import torchaudio

print(torch.__version__)

print(torchaudio.__version__)

外:

1.10.0+cpu

0.10.0+cpu

准备数据和实用程序函数(跳过本节)¶

#@title Prepare data and utility functions. {display-mode: "form"}

#@markdown

#@markdown You do not need to look into this cell.

#@markdown Just execute once and you are good to go.

#@markdown

#@markdown In this tutorial, we will use a speech data from [VOiCES dataset](https://iqtlabs.github.io/voices/), which is licensed under Creative Commos BY 4.0.

import io

import os

import requests

import tarfile

import boto3

from botocore import UNSIGNED

from botocore.config import Config

import matplotlib.pyplot as plt

from IPython.display import Audio, display

_SAMPLE_DIR = "_assets"

SAMPLE_WAV_URL = "https://pytorch-tutorial-assets.s3.amazonaws.com/steam-train-whistle-daniel_simon.wav"

SAMPLE_WAV_PATH = os.path.join(_SAMPLE_DIR, "steam.wav")

SAMPLE_MP3_URL = "https://pytorch-tutorial-assets.s3.amazonaws.com/steam-train-whistle-daniel_simon.mp3"

SAMPLE_MP3_PATH = os.path.join(_SAMPLE_DIR, "steam.mp3")

SAMPLE_GSM_URL = "https://pytorch-tutorial-assets.s3.amazonaws.com/steam-train-whistle-daniel_simon.gsm"

SAMPLE_GSM_PATH = os.path.join(_SAMPLE_DIR, "steam.gsm")

SAMPLE_WAV_SPEECH_URL = "https://pytorch-tutorial-assets.s3.amazonaws.com/VOiCES_devkit/source-16k/train/sp0307/Lab41-SRI-VOiCES-src-sp0307-ch127535-sg0042.wav"

SAMPLE_WAV_SPEECH_PATH = os.path.join(_SAMPLE_DIR, "speech.wav")

SAMPLE_TAR_URL = "https://pytorch-tutorial-assets.s3.amazonaws.com/VOiCES_devkit.tar.gz"

SAMPLE_TAR_PATH = os.path.join(_SAMPLE_DIR, "sample.tar.gz")

SAMPLE_TAR_ITEM = "VOiCES_devkit/source-16k/train/sp0307/Lab41-SRI-VOiCES-src-sp0307-ch127535-sg0042.wav"

S3_BUCKET = "pytorch-tutorial-assets"

S3_KEY = "VOiCES_devkit/source-16k/train/sp0307/Lab41-SRI-VOiCES-src-sp0307-ch127535-sg0042.wav"

def _fetch_data():

os.makedirs(_SAMPLE_DIR, exist_ok=True)

uri = [

(SAMPLE_WAV_URL, SAMPLE_WAV_PATH),

(SAMPLE_MP3_URL, SAMPLE_MP3_PATH),

(SAMPLE_GSM_URL, SAMPLE_GSM_PATH),

(SAMPLE_WAV_SPEECH_URL, SAMPLE_WAV_SPEECH_PATH),

(SAMPLE_TAR_URL, SAMPLE_TAR_PATH),

]

for url, path in uri:

with open(path, 'wb') as file_:

file_.write(requests.get(url).content)

_fetch_data()

def print_stats(waveform, sample_rate=None, src=None):

if src:

print("-" * 10)

print("Source:", src)

print("-" * 10)

if sample_rate:

print("Sample Rate:", sample_rate)

print("Shape:", tuple(waveform.shape))

print("Dtype:", waveform.dtype)

print(f" - Max: {waveform.max().item():6.3f}")

print(f" - Min: {waveform.min().item():6.3f}")

print(f" - Mean: {waveform.mean().item():6.3f}")

print(f" - Std Dev: {waveform.std().item():6.3f}")

print()

print(waveform)

print()

def plot_waveform(waveform, sample_rate, title="Waveform", xlim=None, ylim=None):

waveform = waveform.numpy()

num_channels, num_frames = waveform.shape

time_axis = torch.arange(0, num_frames) / sample_rate

figure, axes = plt.subplots(num_channels, 1)

if num_channels == 1:

axes = [axes]

for c in range(num_channels):

axes[c].plot(time_axis, waveform[c], linewidth=1)

axes[c].grid(True)

if num_channels > 1:

axes[c].set_ylabel(f'Channel {c+1}')

if xlim:

axes[c].set_xlim(xlim)

if ylim:

axes[c].set_ylim(ylim)

figure.suptitle(title)

plt.show(block=False)

def plot_specgram(waveform, sample_rate, title="Spectrogram", xlim=None):

waveform = waveform.numpy()

num_channels, num_frames = waveform.shape

time_axis = torch.arange(0, num_frames) / sample_rate

figure, axes = plt.subplots(num_channels, 1)

if num_channels == 1:

axes = [axes]

for c in range(num_channels):

axes[c].specgram(waveform[c], Fs=sample_rate)

if num_channels > 1:

axes[c].set_ylabel(f'Channel {c+1}')

if xlim:

axes[c].set_xlim(xlim)

figure.suptitle(title)

plt.show(block=False)

def play_audio(waveform, sample_rate):

waveform = waveform.numpy()

num_channels, num_frames = waveform.shape

if num_channels == 1:

display(Audio(waveform[0], rate=sample_rate))

elif num_channels == 2:

display(Audio((waveform[0], waveform[1]), rate=sample_rate))

else:

raise ValueError("Waveform with more than 2 channels are not supported.")

def _get_sample(path, resample=None):

effects = [

["remix", "1"]

]

if resample:

effects.extend([

["lowpass", f"{resample // 2}"],

["rate", f'{resample}'],

])

return torchaudio.sox_effects.apply_effects_file(path, effects=effects)

def get_sample(*, resample=None):

return _get_sample(SAMPLE_WAV_PATH, resample=resample)

def inspect_file(path):

print("-" * 10)

print("Source:", path)

print("-" * 10)

print(f" - File size: {os.path.getsize(path)} bytes")

print(f" - {torchaudio.info(path)}")

查询音频元数据¶

函数获取音频元数据。您可以提供

路径类对象或类文件对象。torchaudio.info

metadata = torchaudio.info(SAMPLE_WAV_PATH)

print(metadata)

外:

AudioMetaData(sample_rate=44100, num_frames=109368, num_channels=2, bits_per_sample=16, encoding=PCM_S)

哪里

sample_rate是音频的采样率num_channels是通道数num_frames是每个通道的帧数bits_per_sample是位深度encoding是示例编码格式

encoding可以采用以下值之一:

"PCM_S": 有符号整数线性 PCM"PCM_U":无符号整数线性 PCM"PCM_F": 浮点线性 PCM"FLAC": Flac,免费无损音频 编 解码 器"ULAW": 穆罗, [维基百科]"ALAW": A-law [维基百科]"MP3":MP3、MPEG-1 音频层 III"VORBIS": OGG Vorbis [xiph.org]"AMR_NB":自适应多速率 [维基百科]"AMR_WB": 自适应多速率宽带 [维基百科]"OPUS": 作品 [opus-codec.org]"GSM": GSM-FR [维基百科]"UNKNOWN"以上都不是

注意

bits_per_sample可用于具有压缩和/或 可变比特率(如 MP3)。0num_frames可以是 GSM-FR 格式。0

metadata = torchaudio.info(SAMPLE_MP3_PATH)

print(metadata)

metadata = torchaudio.info(SAMPLE_GSM_PATH)

print(metadata)

外:

AudioMetaData(sample_rate=44100, num_frames=110559, num_channels=2, bits_per_sample=0, encoding=MP3)

AudioMetaData(sample_rate=8000, num_frames=0, num_channels=1, bits_per_sample=0, encoding=GSM)

查询类文件对象¶

info适用于类文件对象。

print("Source:", SAMPLE_WAV_URL)

with requests.get(SAMPLE_WAV_URL, stream=True) as response:

metadata = torchaudio.info(response.raw)

print(metadata)

外:

Source: https://pytorch-tutorial-assets.s3.amazonaws.com/steam-train-whistle-daniel_simon.wav

AudioMetaData(sample_rate=44100, num_frames=109368, num_channels=2, bits_per_sample=16, encoding=PCM_S)

注意当传递一个类似文件的对象时,不会读取

所有基础数据;相反,它只读取一部分

的数据。

因此,对于给定的音频格式,它可能无法检索

正确的元数据,包括格式本身。

下面的示例对此进行了说明。info

使用 argument 指定输入的音频格式。

format返回的元数据具有

num_frames = 0

print("Source:", SAMPLE_MP3_URL)

with requests.get(SAMPLE_MP3_URL, stream=True) as response:

metadata = torchaudio.info(response.raw, format="mp3")

print(f"Fetched {response.raw.tell()} bytes.")

print(metadata)

外:

Source: https://pytorch-tutorial-assets.s3.amazonaws.com/steam-train-whistle-daniel_simon.mp3

Fetched 8192 bytes.

AudioMetaData(sample_rate=44100, num_frames=0, num_channels=2, bits_per_sample=0, encoding=MP3)

将音频数据加载到 Tensor 中¶

要加载音频数据,您可以使用 .torchaudio.load

此函数接受 path-like object 或 file-like object 作为 input。

返回值是 waveform () 和 sample rate 的元组

().Tensorint

默认情况下,生成的 tensor 对象具有 和

其值范围在 中标准化。dtype=torch.float32[-1.0, 1.0]

支持的格式列表请参考 torchaudio 文档。

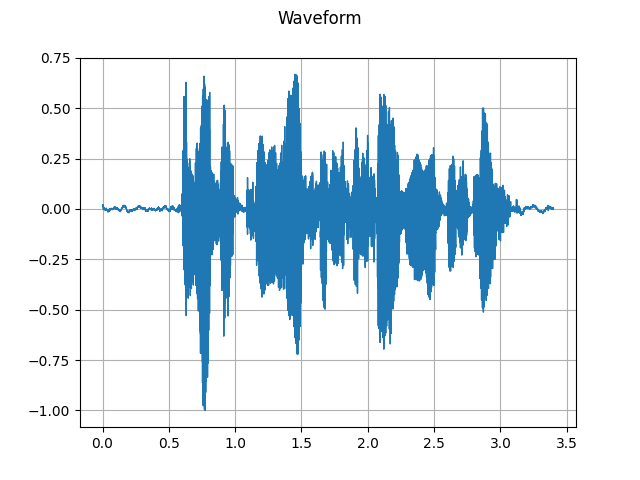

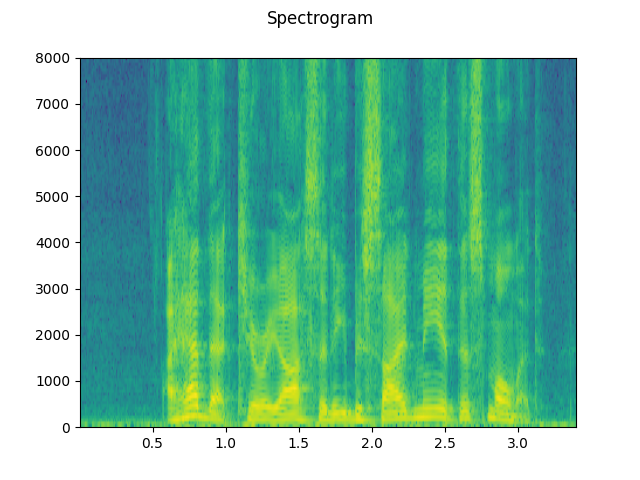

waveform, sample_rate = torchaudio.load(SAMPLE_WAV_SPEECH_PATH)

print_stats(waveform, sample_rate=sample_rate)

plot_waveform(waveform, sample_rate)

plot_specgram(waveform, sample_rate)

play_audio(waveform, sample_rate)

外:

Sample Rate: 16000

Shape: (1, 54400)

Dtype: torch.float32

- Max: 0.668

- Min: -1.000

- Mean: 0.000

- Std Dev: 0.122

tensor([[0.0183, 0.0180, 0.0180, ..., 0.0018, 0.0019, 0.0032]])

<IPython.lib.display.Audio object>

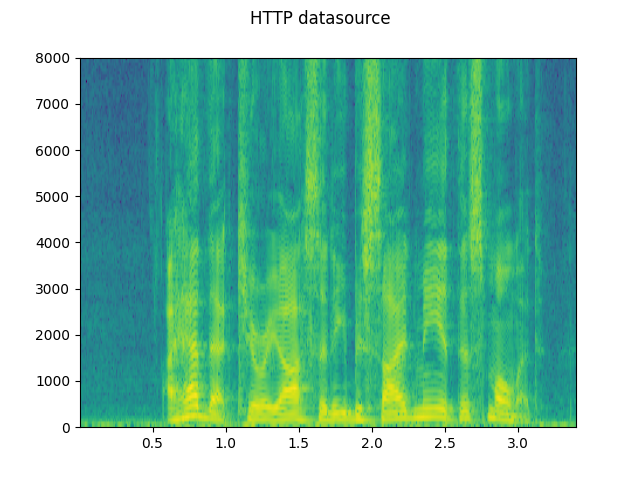

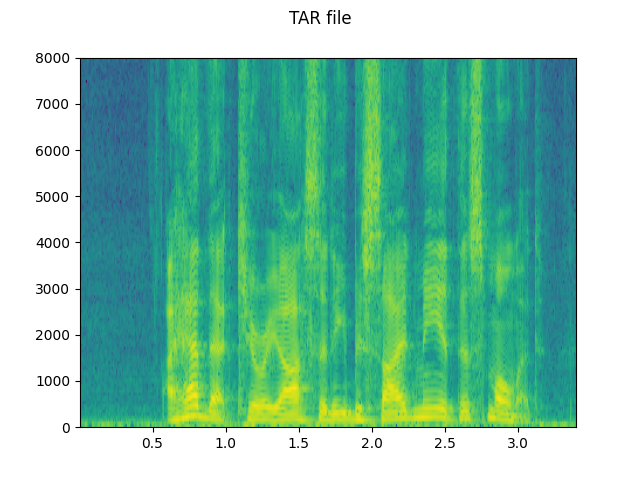

从类文件对象加载¶

torchaudio的 I/O 函数现在支持类似文件的对象。这

允许从位置获取和解码音频数据

在本地文件系统内部和外部。

以下示例对此进行了说明。

# Load audio data as HTTP request

with requests.get(SAMPLE_WAV_SPEECH_URL, stream=True) as response:

waveform, sample_rate = torchaudio.load(response.raw)

plot_specgram(waveform, sample_rate, title="HTTP datasource")

# Load audio from tar file

with tarfile.open(SAMPLE_TAR_PATH, mode='r') as tarfile_:

fileobj = tarfile_.extractfile(SAMPLE_TAR_ITEM)

waveform, sample_rate = torchaudio.load(fileobj)

plot_specgram(waveform, sample_rate, title="TAR file")

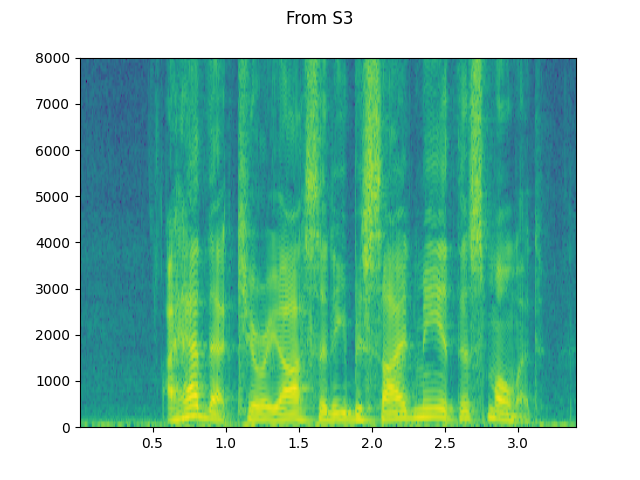

# Load audio from S3

client = boto3.client('s3', config=Config(signature_version=UNSIGNED))

response = client.get_object(Bucket=S3_BUCKET, Key=S3_KEY)

waveform, sample_rate = torchaudio.load(response['Body'])

plot_specgram(waveform, sample_rate, title="From S3")

切片提示¶

提供和参数限制

decoding 到 input 的相应段。num_framesframe_offset

使用 vanilla Tensor 切片可以获得相同的结果,

(即 )。然而

提供和参数更多

有效。waveform[:, frame_offset:frame_offset+num_frames]num_framesframe_offset

这是因为该函数将结束数据采集和解码 一旦它完成对请求的帧的解码。这是有利的 当音频数据通过网络传输时,数据传输将 stop 一旦获取到必要的数据量。

下面的示例对此进行了说明。

# Illustration of two different decoding methods.

# The first one will fetch all the data and decode them, while

# the second one will stop fetching data once it completes decoding.

# The resulting waveforms are identical.

frame_offset, num_frames = 16000, 16000 # Fetch and decode the 1 - 2 seconds

print("Fetching all the data...")

with requests.get(SAMPLE_WAV_SPEECH_URL, stream=True) as response:

waveform1, sample_rate1 = torchaudio.load(response.raw)

waveform1 = waveform1[:, frame_offset:frame_offset+num_frames]

print(f" - Fetched {response.raw.tell()} bytes")

print("Fetching until the requested frames are available...")

with requests.get(SAMPLE_WAV_SPEECH_URL, stream=True) as response:

waveform2, sample_rate2 = torchaudio.load(

response.raw, frame_offset=frame_offset, num_frames=num_frames)

print(f" - Fetched {response.raw.tell()} bytes")

print("Checking the resulting waveform ... ", end="")

assert (waveform1 == waveform2).all()

print("matched!")

外:

Fetching all the data...

- Fetched 108844 bytes

Fetching until the requested frames are available...

- Fetched 65580 bytes

Checking the resulting waveform ... matched!

将音频保存到文件¶

要将音频数据保存为常见应用程序可解释的格式,

您可以使用 .torchaudio.save

此函数接受 path-like object 或 file-like object。

在传递类似文件的对象时,你还需要提供参数,以便函数知道它应该使用哪种格式。在

对于路径类对象,该函数将从

扩展。如果要保存到没有扩展名的文件,则需要

以提供参数 。formatformat

保存 WAV 格式的数据时,Tensor 的默认编码

是 32 位浮点 PCM。您可以提供参数并更改此行为。例如,要保存数据

在 16 位有符号整数 PCM 中,您可以执行以下作。float32encodingbits_per_sample

注意以较低位深度的编码保存数据会减少 生成的文件大小以及精度。

waveform, sample_rate = get_sample()

print_stats(waveform, sample_rate=sample_rate)

# Save without any encoding option.

# The function will pick up the encoding which

# the provided data fit

path = f"{_SAMPLE_DIR}/save_example_default.wav"

torchaudio.save(path, waveform, sample_rate)

inspect_file(path)

# Save as 16-bit signed integer Linear PCM

# The resulting file occupies half the storage but loses precision

path = f"{_SAMPLE_DIR}/save_example_PCM_S16.wav"

torchaudio.save(

path, waveform, sample_rate,

encoding="PCM_S", bits_per_sample=16)

inspect_file(path)

外:

Sample Rate: 44100

Shape: (1, 109368)

Dtype: torch.float32

- Max: 0.508

- Min: -0.449

- Mean: -0.000

- Std Dev: 0.122

tensor([[0.0027, 0.0063, 0.0092, ..., 0.0032, 0.0047, 0.0052]])

----------

Source: _assets/save_example_default.wav

----------

- File size: 437530 bytes

- AudioMetaData(sample_rate=44100, num_frames=109368, num_channels=1, bits_per_sample=32, encoding=PCM_F)

----------

Source: _assets/save_example_PCM_S16.wav

----------

- File size: 218780 bytes

- AudioMetaData(sample_rate=44100, num_frames=109368, num_channels=1, bits_per_sample=16, encoding=PCM_S)

torchaudio.save也可以处理其他格式。仅举几例:

waveform, sample_rate = get_sample(resample=8000)

formats = [

"mp3",

"flac",

"vorbis",

"sph",

"amb",

"amr-nb",

"gsm",

]

for format in formats:

path = f"{_SAMPLE_DIR}/save_example.{format}"

torchaudio.save(path, waveform, sample_rate, format=format)

inspect_file(path)

外:

----------

Source: _assets/save_example.mp3

----------

- File size: 2664 bytes

- AudioMetaData(sample_rate=8000, num_frames=21312, num_channels=1, bits_per_sample=0, encoding=MP3)

----------

Source: _assets/save_example.flac

----------

- File size: 47315 bytes

- AudioMetaData(sample_rate=8000, num_frames=19840, num_channels=1, bits_per_sample=24, encoding=FLAC)

----------

Source: _assets/save_example.vorbis

----------

- File size: 9967 bytes

- AudioMetaData(sample_rate=8000, num_frames=19840, num_channels=1, bits_per_sample=0, encoding=VORBIS)

----------

Source: _assets/save_example.sph

----------

- File size: 80384 bytes

- AudioMetaData(sample_rate=8000, num_frames=19840, num_channels=1, bits_per_sample=32, encoding=PCM_S)

----------

Source: _assets/save_example.amb

----------

- File size: 79418 bytes

- AudioMetaData(sample_rate=8000, num_frames=19840, num_channels=1, bits_per_sample=32, encoding=PCM_F)

----------

Source: _assets/save_example.amr-nb

----------

- File size: 1618 bytes

- AudioMetaData(sample_rate=8000, num_frames=19840, num_channels=1, bits_per_sample=0, encoding=AMR_NB)

----------

Source: _assets/save_example.gsm

----------

- File size: 4092 bytes

- AudioMetaData(sample_rate=8000, num_frames=0, num_channels=1, bits_per_sample=0, encoding=GSM)

存储为类似文件的对象¶

与其他 I/O 功能类似,您可以将音频保存为类似 file 的

对象。当保存到类文件对象时,参数为

必填。format

waveform, sample_rate = get_sample()

# Saving to bytes buffer

buffer_ = io.BytesIO()

torchaudio.save(buffer_, waveform, sample_rate, format="wav")

buffer_.seek(0)

print(buffer_.read(16))

外:

b'RIFF\x12\xad\x06\x00WAVEfmt '

脚本总运行时间:(0 分 2.110 秒)