Text-to-Speech with Tacotron2

import IPython

import matplotlib

import matplotlib.pyplot as plt

Overview

This tutorial shows how to build a text-to-speech pipeline using the pretrained Tacotron2 in torchaudio.

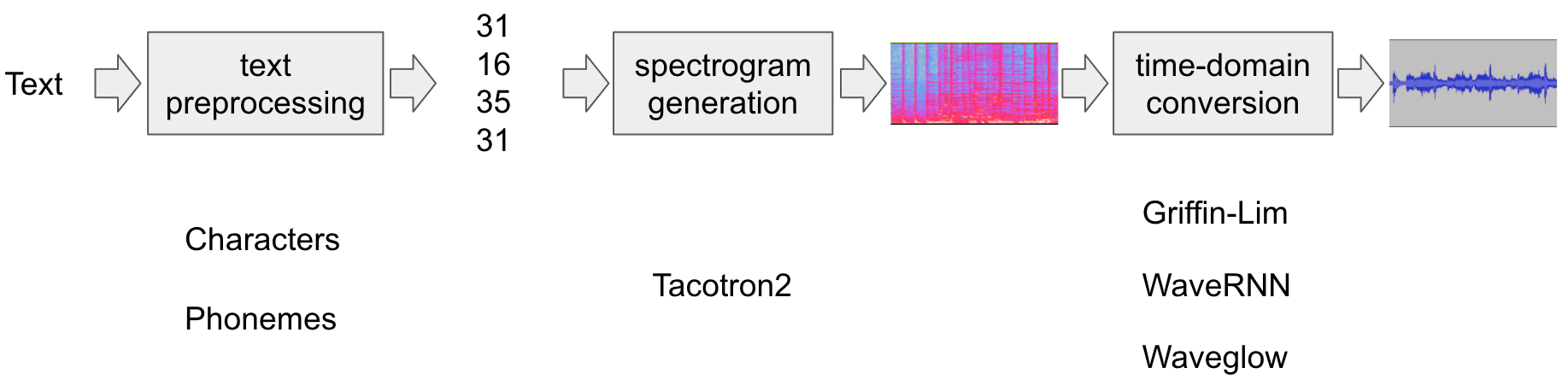

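The text-to-speech pipeline goes as follows:

Text preprocessing

First, the input text is encoded into a list of symbols. In this tutorial, we use English characters and phonemes as the symbols.

Spectrogram generation

From the encoded text, a spectrogram is generated. We use the Tacotron2 model for this.

Time-domain conversion

The last step is converting the spectrogram into a waveform. The component that generates speech from a spectrogram is also called a vocoder. In this tutorial, three different vocoders are used: WaveRNN, Griffin-Lim, and Nvidia's WaveGlow.

The whole process is illustrated in the figure.

All the related components are bundled in torchaudio.pipelines.Tacotron2TTSBundle(), but this tutorial also covers the process under the hood.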

Preparation

First, we install the necessary dependencies. In addition to torchaudio, DeepPhonemizer is required to perform phoneme-based encoding.

# When running this example in notebook, install DeepPhonemizer

# !pip3 install deep_phonemizer

import torch

import torchaudio

matplotlib.rcParams["figure.figsize"] = [16.0, 4.8]

torch.random.manual_seed(0)

device = "cuda" if torch.cuda.is_available() else "cpu"

print(torch.__version__)

print(torchaudio.__version__)

print(device)

Out:

1.11.0+cpu

0.11.0+cpu

cpu

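Text Processing

Character-based encoding

In this section, we go through how character-based encoding works.

Since the pretrained Tacotron2 model expects a specific symbol table, the same functionality is available in torchaudio. This section is mainly here to explain the basis of the encoding.

First, we define the set of symbols. For example, we can use '_-!\'(),.:;? abcdefghijklmnopqrstuvwxyz'. Then, we map each character of the input text to the index of the corresponding symbol in the table.

The following is an example of such processing. In the example, symbols that are not in the table are ignored.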
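A minimal sketch of the lookup described above, assuming the symbol string quoted in the text. The helper name `text_to_sequence` and the variable names are illustrative and not part of torchaudio. Each character is lowercased and mapped to its position in the table, and characters missing from the table are dropped.

```python
# Symbol table quoted in the text; a symbol's index is its position in the string.
symbols = "_-!'(),.:;? abcdefghijklmnopqrstuvwxyz"
look_up = {s: i for i, s in enumerate(symbols)}


def text_to_sequence(text):
    """Map each character to its table index, skipping unknown characters."""
    return [look_up[c] for c in text.lower() if c in look_up]


text = "Hello world! Text to speech!"
print(text_to_sequence(text))
```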

Out:

[19, 16, 23, 23, 26, 11, 34, 26, 29, 23, 15, 2, 11, 31, 16, 35, 31, 11, 31, 26, 11, 30, 27, 16, 16, 14, 19, 2]

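As mentioned above, the symbol table and indices must match what the pretrained Tacotron2 model expects. torchaudio provides the transform along with the pretrained model. For example, you can instantiate and use such a transform as follows.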
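One way to do this is sketched here, under the assumption that the character-based bundle `torchaudio.pipelines.TACOTRON2_WAVERNN_CHAR_LJSPEECH` is the one intended at this point (the text does not name it). The wrapper `encode_with_bundle` is a hypothetical helper; torchaudio is imported lazily so the function also works with any object that exposes `get_text_processor()`.

```python
def encode_with_bundle(text, bundle=None):
    """Encode text with the text processor that ships with a TTS bundle."""
    if bundle is None:
        # Assumption: the character-based LJSpeech bundle is the intended one.
        import torchaudio

        bundle = torchaudio.pipelines.TACOTRON2_WAVERNN_CHAR_LJSPEECH
    processor = bundle.get_text_processor()
    # The processor returns the encoded batch and the valid length of each item.
    processed, lengths = processor(text)
    return processed, lengths
```

With torchaudio installed, calling `encode_with_bundle("Hello world! Text to speech!")` and printing the two returned values corresponds to the kind of output shown next.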

Out:

tensor([[19, 16, 23, 23, 26, 11, 34, 26, 29, 23, 15, 2, 11, 31, 16, 35, 31, 11,

31, 26, 11, 30, 27, 16, 16, 14, 19, 2]])

tensor([28], dtype=torch.int32)

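The processor object takes either a text or a list of texts as input. When a list of texts is provided, the returned lengths variable represents the valid length of each processed sequence in the output batch.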
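To make the role of `lengths` concrete, here is a plain-Python emulation of batching. It is not torchaudio's implementation: the function name `encode_batch` is hypothetical, and right-padding with index 0 is an assumption made for this sketch. Sequences are padded to a common width and the original lengths are returned alongside.

```python
symbols = "_-!'(),.:;? abcdefghijklmnopqrstuvwxyz"
look_up = {s: i for i, s in enumerate(symbols)}


def encode_batch(texts, pad_index=0):
    """Encode a text or a list of texts into a padded batch plus valid lengths."""
    if isinstance(texts, str):
        texts = [texts]
    encoded = [[look_up[c] for c in t.lower() if c in look_up] for t in texts]
    lengths = [len(seq) for seq in encoded]
    max_len = max(lengths)
    # Right-pad every sequence so the batch is rectangular.
    batch = [seq + [pad_index] * (max_len - len(seq)) for seq in encoded]
    return batch, lengths


batch, lengths = encode_batch(["Hello world!", "Hi!"])
print(lengths)   # valid length of each row
print(batch[1])  # the shorter row, padded to the batch width
```

Only the first `lengths[i]` entries of row `i` carry text; the rest is padding that downstream code should ignore.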

The intermediate representation can be retrieved as follows.
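A small sketch of this step: the indices are mapped back to symbols, using only the valid part of the row. The symbol string and the encoded values are copied from the text above; with a torchaudio text processor the table would come from the processor itself (its `tokens` attribute, as far as the documented interface goes) rather than from a hand-written string.

```python
symbols = "_-!'(),.:;? abcdefghijklmnopqrstuvwxyz"

# Encoded sentence and its valid length, copied from the outputs shown earlier.
processed = [[19, 16, 23, 23, 26, 11, 34, 26, 29, 23, 15, 2, 11, 31, 16, 35, 31, 11,
              31, 26, 11, 30, 27, 16, 16, 14, 19, 2]]
lengths = [28]

# Keep only the valid part of the first row and look each index up in the table.
tokens = [symbols[i] for i in processed[0][: lengths[0]]]
print(tokens)
```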

Out:

['h', 'e', 'l', 'l', 'o', ' ', 'w', 'o', 'r', 'l', 'd', '!', ' ', 't', 'e', 'x', 't', ' ', 't', 'o', ' ', 's', 'p', 'e', 'e', 'c', 'h', '!']

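Phoneme-based encoding

Phoneme-based encoding is similar to character-based encoding, but it uses a symbol table based on phonemes and a G2P (Grapheme-to-Phoneme) model.

The details of the G2P model are out of the scope of this tutorial; we will just look at what the conversion looks like.

As with character-based encoding, the encoding process is expected to match what the pretrained Tacotron2 model was trained on. torchaudio has an interface to create the process.

Applied to the same sentence, the phoneme-based processor produces the output below. Behind the scenes, a G2P model is created using the DeepPhonemizer package, and the pretrained weights published by the author of DeepPhonemizer are fetched.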

Out:

tensor([[54, 20, 65, 69, 11, 92, 44, 65, 38, 2, 11, 81, 40, 64, 79, 81, 11, 81,

20, 11, 79, 77, 59, 37, 2]])

tensor([25], dtype=torch.int32)

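Notice that the encoded values are different from those in the character-based example.

The intermediate representation looks like the following.

Out: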

['HH', 'AH', 'L', 'OW', ' ', 'W', 'ER', 'L', 'D', '!', ' ', 'T', 'EH', 'K', 'S', 'T', ' ', 'T', 'AH', ' ', 'S', 'P', 'IY', 'CH', '!']
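The two outputs above can be lined up to see the symbol-to-index pairing. The sketch below rebuilds a partial lookup table from those outputs only. The full phoneme table used by the processor is not reproduced here, and the G2P step is assumed to have already produced the phoneme list.

```python
# Phonemes and indices copied from the outputs above.
phonemes = ['HH', 'AH', 'L', 'OW', ' ', 'W', 'ER', 'L', 'D', '!', ' ',
            'T', 'EH', 'K', 'S', 'T', ' ', 'T', 'AH', ' ', 'S', 'P', 'IY', 'CH', '!']
indices = [54, 20, 65, 69, 11, 92, 44, 65, 38, 2, 11, 81, 40, 64, 79, 81, 11, 81,
           20, 11, 79, 77, 59, 37, 2]

# Partial table: only the symbols that occur in this sentence.
partial_table = {}
for symbol, index in zip(phonemes, indices):
    # Every occurrence of a symbol must map to the same index.
    assert partial_table.setdefault(symbol, index) == index

print(sorted(partial_table.items(), key=lambda kv: kv[1]))

# Re-encoding the phoneme list with the partial table reproduces the indices.
reencoded = [partial_table[p] for p in phonemes]
print(reencoded == indices)
```

In this sentence the space and the exclamation mark keep the indices they had in the character-based output (11 and 2), while the phoneme symbols take other values.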

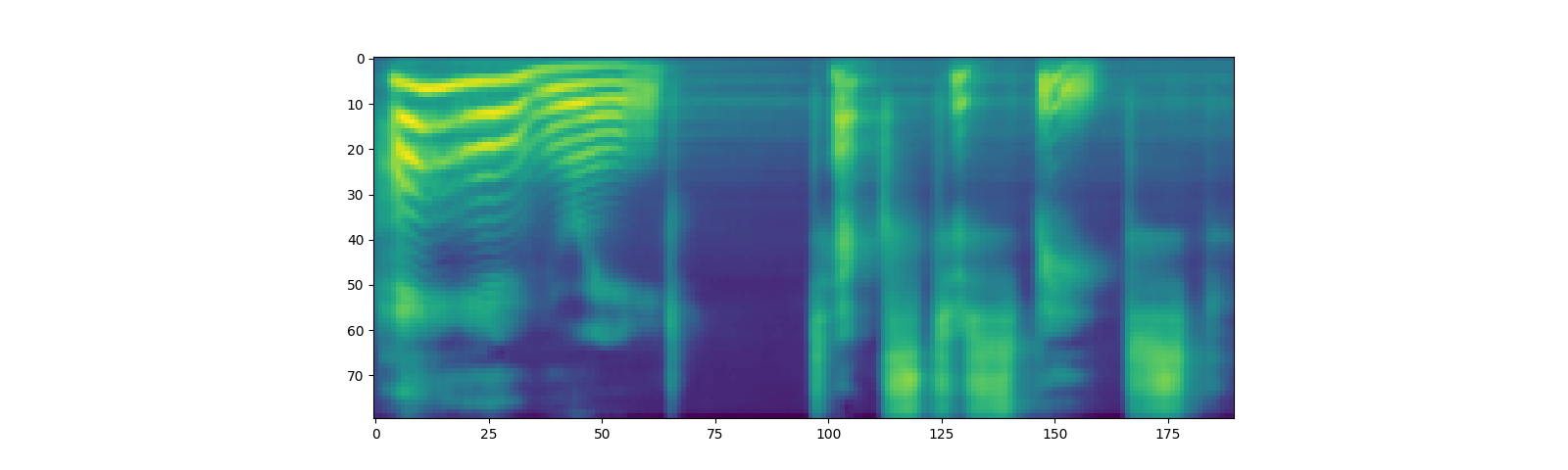

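Spectrogram Generation

Tacotron2 is the model we use to generate a spectrogram from the encoded text. For the details of the model, please refer to the paper.

It is easy to instantiate a Tacotron2 model with pretrained weights. Note, however, that the input to a Tacotron2 model needs to be processed by the matching text processor.

torchaudio.pipelines.Tacotron2TTSBundle() bundles the matching models and processors together so that it is easy to create the pipeline.

For the available bundles and their usage, please refer to torchaudio.pipelines.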

bundle = torchaudio.pipelines.TACOTRON2_WAVERNN_PHONE_LJSPEECH

processor = bundle.get_text_processor()

tacotron2 = bundle.get_tacotron2().to(device)

text = "Hello world! Text to speech!"

with torch.inference_mode():

    processed, lengths = processor(text)

    processed = processed.to(device)

    lengths = lengths.to(device)

    spec, _, _ = tacotron2.infer(processed, lengths)

plt.imshow(spec[0].cpu().detach())

Out:

Downloading: "https://download.pytorch.org/torchaudio/models/tacotron2_english_phonemes_1500_epochs_wavernn_ljspeech.pth" to /root/.cache/torch/hub/checkpoints/tacotron2_english_phonemes_1500_epochs_wavernn_ljspeech.pth

<matplotlib.image.AxesImage object at 0x7fce43221910>

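Note that the Tacotron2.infer method performs multinomial sampling; therefore, the process of generating the spectrogram incurs randomness. Running inference several times on the same input can produce spectrograms of different lengths.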
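A sketch of how this randomness can be observed: the same encoded input is passed through `infer` several times and the spectrogram shapes are collected. The helper name `sample_spectrogram_shapes` and its `n_runs` parameter are hypothetical; `tacotron2`, `processed` and `lengths` are assumed to be the objects created in the code above.

```python
def sample_spectrogram_shapes(tacotron2, processed, lengths, n_runs=3):
    """Run inference repeatedly on one input and collect the spectrogram shapes."""
    import torch  # imported here so the helper can be defined without torch loaded

    shapes = []
    for _ in range(n_runs):
        with torch.inference_mode():
            spec, spec_lengths, _ = tacotron2.infer(processed, lengths)
        # spec[0] is the spectrogram of the first (and only) item in the batch.
        shapes.append(tuple(spec[0].shape))
    return shapes
```

Printing the shapes returned for three runs gives output of the kind shown next, where the first dimension stays fixed and the number of frames varies.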

Out:

torch.Size([80, 155])

torch.Size([80, 167])

torch.Size([80, 164])

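Waveform Generation

Once the spectrogram is generated, the last step is to recover the waveform from the spectrogram.

torchaudio provides vocoders based on GriffinLim and WaveRNN.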

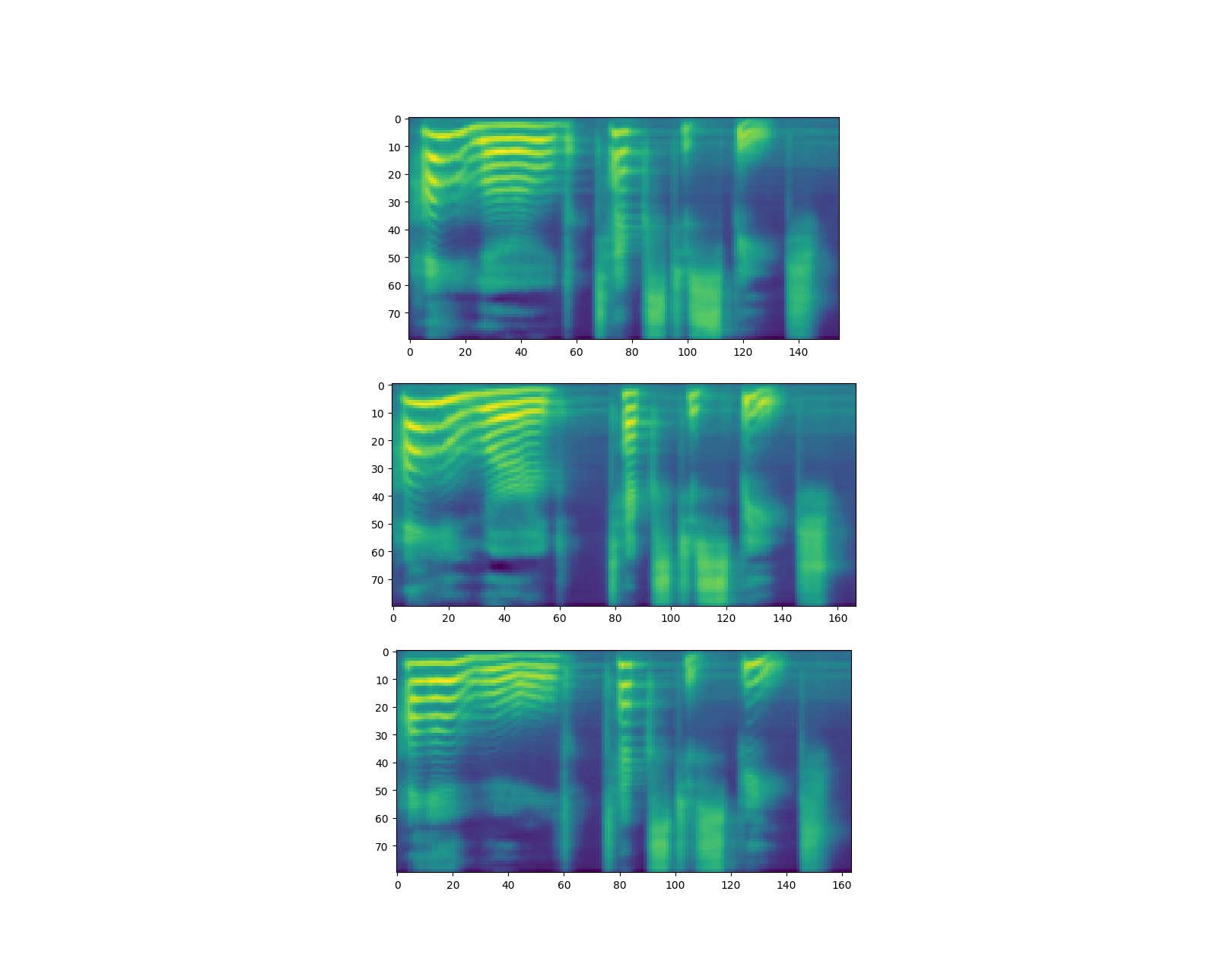

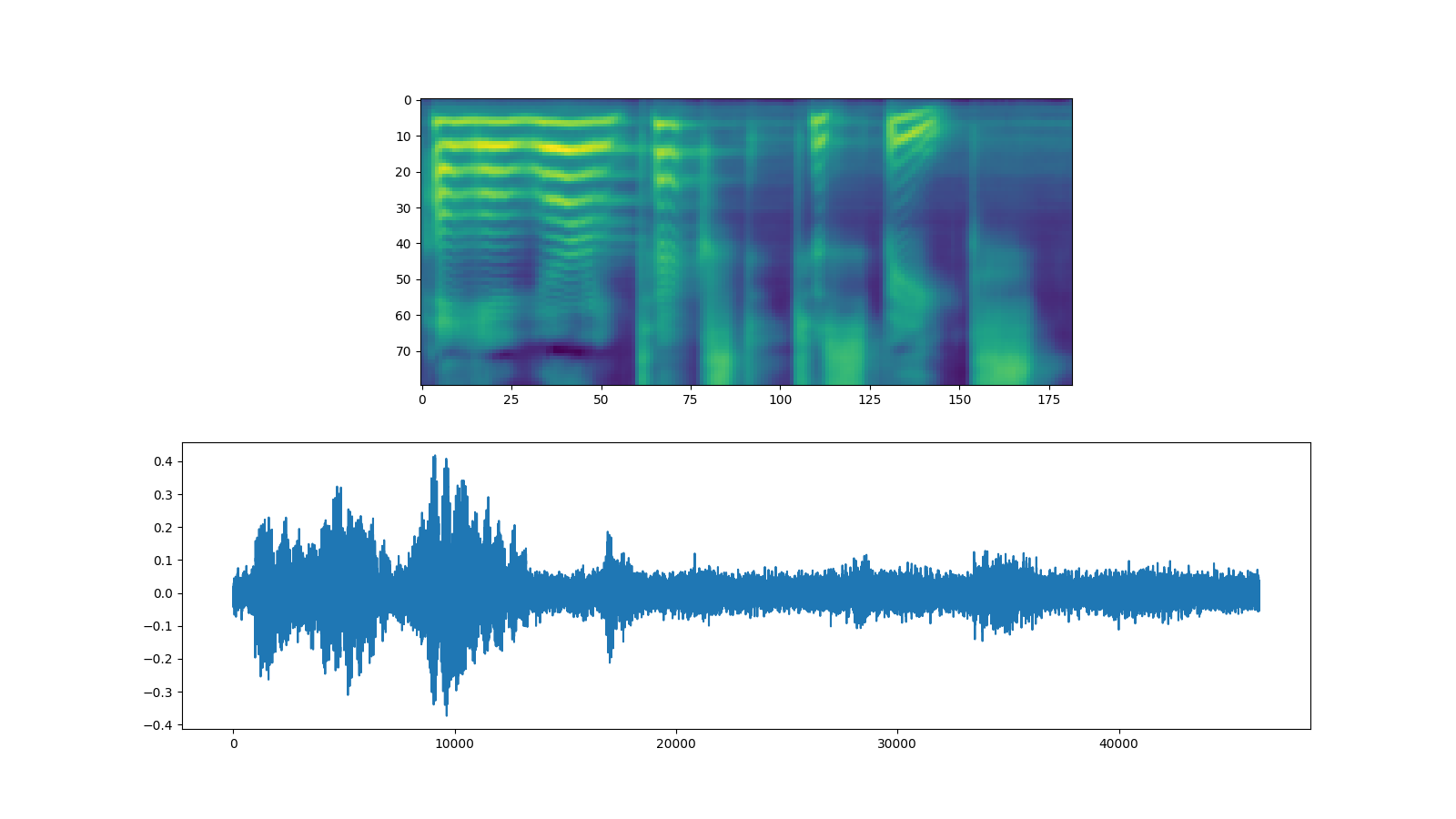

WaveRNN

Continuing from the previous section, we can instantiate the matching WaveRNN model from the same bundle.

bundle = torchaudio.pipelines.TACOTRON2_WAVERNN_PHONE_LJSPEECH

processor = bundle.get_text_processor()

tacotron2 = bundle.get_tacotron2().to(device)

vocoder = bundle.get_vocoder().to(device)

text = "Hello world! Text to speech!"

with torch.inference_mode():

    processed, lengths = processor(text)

    processed = processed.to(device)

    lengths = lengths.to(device)

    spec, spec_lengths, _ = tacotron2.infer(processed, lengths)

    waveforms, lengths = vocoder(spec, spec_lengths)

fig, [ax1, ax2] = plt.subplots(2, 1, figsize=(16, 9))

ax1.imshow(spec[0].cpu().detach())

ax2.plot(waveforms[0].cpu().detach())

torchaudio.save("_assets/output_wavernn.wav", waveforms[0:1].cpu(), sample_rate=vocoder.sample_rate)

IPython.display.Audio("_assets/output_wavernn.wav")

Out:

Downloading: "https://download.pytorch.org/torchaudio/models/wavernn_10k_epochs_8bits_ljspeech.pth" to /root/.cache/torch/hub/checkpoints/wavernn_10k_epochs_8bits_ljspeech.pth

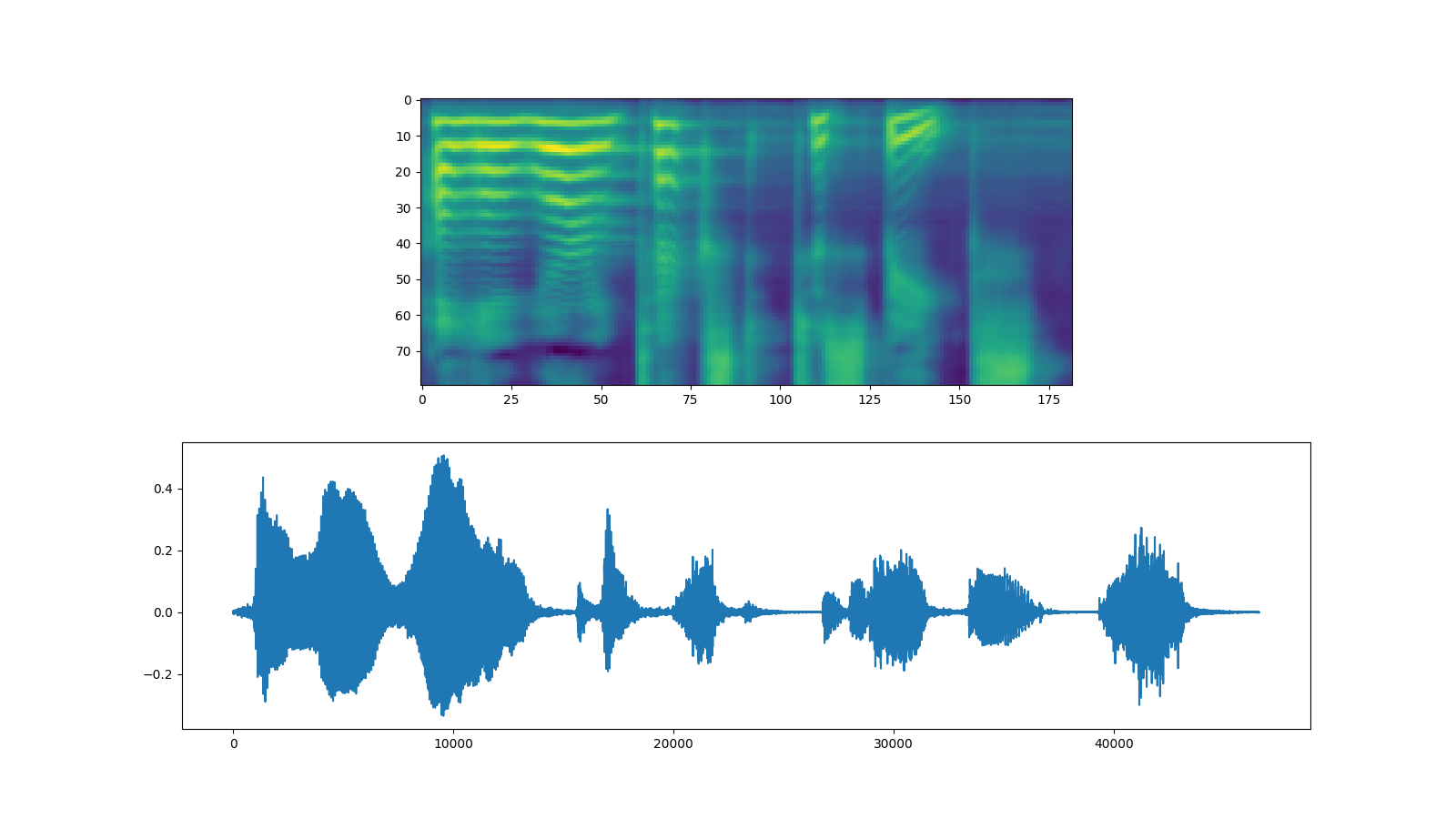

Griffin-Lim

Using the Griffin-Lim vocoder works the same way as WaveRNN. You can instantiate the vocoder object with the get_vocoder method and pass it the spectrogram.

bundle = torchaudio.pipelines.TACOTRON2_GRIFFINLIM_PHONE_LJSPEECH

processor = bundle.get_text_processor()

tacotron2 = bundle.get_tacotron2().to(device)

vocoder = bundle.get_vocoder().to(device)

with torch.inference_mode():

    processed, lengths = processor(text)

    processed = processed.to(device)

    lengths = lengths.to(device)

    spec, spec_lengths, _ = tacotron2.infer(processed, lengths)

    waveforms, lengths = vocoder(spec, spec_lengths)

fig, [ax1, ax2] = plt.subplots(2, 1, figsize=(16, 9))

ax1.imshow(spec[0].cpu().detach())

ax2.plot(waveforms[0].cpu().detach())

torchaudio.save(

    "_assets/output_griffinlim.wav",

    waveforms[0:1].cpu(),

    sample_rate=vocoder.sample_rate,

)

IPython.display.Audio("_assets/output_griffinlim.wav")

Out:

Downloading: "https://download.pytorch.org/torchaudio/models/tacotron2_english_phonemes_1500_epochs_ljspeech.pth" to /root/.cache/torch/hub/checkpoints/tacotron2_english_phonemes_1500_epochs_ljspeech.pth

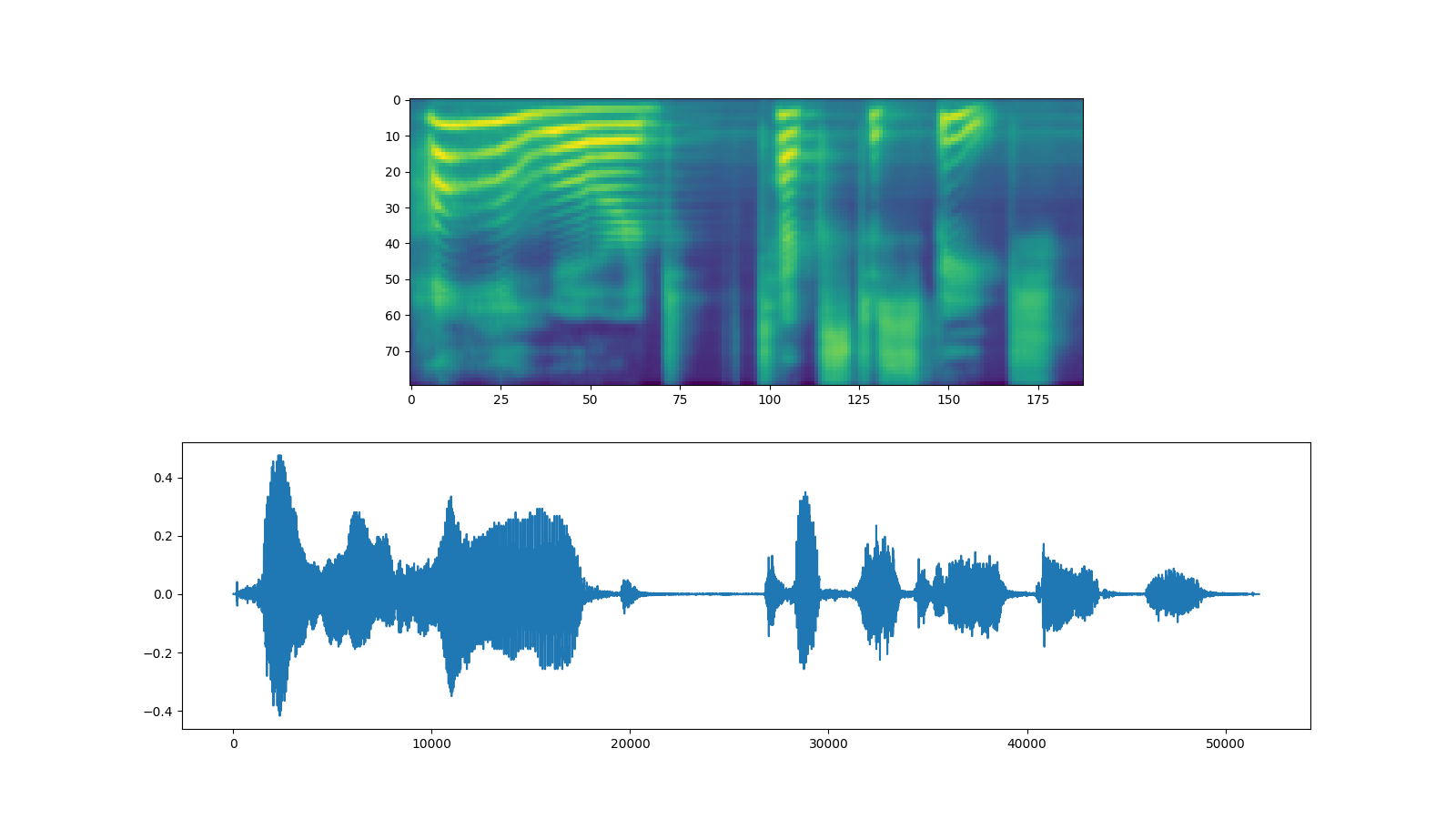

Waveglow

Waveglow is a vocoder published by Nvidia. The pretrained weights are published on Torch Hub. The model can be instantiated using the torch.hub module.

# Workaround to load model mapped on GPU

# https://stackoverflow.com/a/61840832

waveglow = torch.hub.load(

    "NVIDIA/DeepLearningExamples:torchhub",

    "nvidia_waveglow",

    model_math="fp32",

    pretrained=False,

)

checkpoint = torch.hub.load_state_dict_from_url(

    "https://api.ngc.nvidia.com/v2/models/nvidia/waveglowpyt_fp32/versions/1/files/nvidia_waveglowpyt_fp32_20190306.pth",  # noqa: E501

    progress=False,

    map_location=device,

)

state_dict = {key.replace("module.", ""): value for key, value in checkpoint["state_dict"].items()}

waveglow.load_state_dict(state_dict)

waveglow = waveglow.remove_weightnorm(waveglow)

waveglow = waveglow.to(device)

waveglow.eval()

with torch.no_grad():

    waveforms = waveglow.infer(spec)

fig, [ax1, ax2] = plt.subplots(2, 1, figsize=(16, 9))

ax1.imshow(spec[0].cpu().detach())

ax2.plot(waveforms[0].cpu().detach())

torchaudio.save("_assets/output_waveglow.wav", waveforms[0:1].cpu(), sample_rate=22050)

IPython.display.Audio("_assets/output_waveglow.wav")

Out:

Downloading: "https://github.com/NVIDIA/DeepLearningExamples/archive/torchhub.zip" to /root/.cache/torch/hub/torchhub.zip

/root/.cache/torch/hub/NVIDIA_DeepLearningExamples_torchhub/PyTorch/Classification/ConvNets/image_classification/models/common.py:13: UserWarning: pytorch_quantization module not found, quantization will not be available

warnings.warn(

/root/.cache/torch/hub/NVIDIA_DeepLearningExamples_torchhub/PyTorch/Classification/ConvNets/image_classification/models/efficientnet.py:17: UserWarning: pytorch_quantization module not found, quantization will not be available

warnings.warn(

/root/.cache/torch/hub/NVIDIA_DeepLearningExamples_torchhub/PyTorch/SpeechSynthesis/Tacotron2/waveglow/model.py:55: UserWarning: torch.qr is deprecated in favor of torch.linalg.qr and will be removed in a future PyTorch release.

The boolean parameter 'some' has been replaced with a string parameter 'mode'.

Q, R = torch.qr(A, some)

should be replaced with

Q, R = torch.linalg.qr(A, 'reduced' if some else 'complete') (Triggered internally at ../aten/src/ATen/native/BatchLinearAlgebra.cpp:1980.)

W = torch.qr(torch.FloatTensor(c, c).normal_())[0]

Downloading: "https://api.ngc.nvidia.com/v2/models/nvidia/waveglowpyt_fp32/versions/1/files/nvidia_waveglowpyt_fp32_20190306.pth" to /root/.cache/torch/hub/checkpoints/nvidia_waveglowpyt_fp32_20190306.pth
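The key renaming in the checkpoint-loading code above can be illustrated without downloading anything. Checkpoints saved from a model wrapped in `torch.nn.DataParallel` carry a `module.` prefix on every parameter name; whether that is the origin of the prefix in the WaveGlow checkpoint is an assumption here. The toy dictionary below is hypothetical and stands in for `checkpoint["state_dict"]`.

```python
# Hypothetical stand-in for checkpoint["state_dict"]; real values would be tensors.
checkpoint_state = {
    "module.upsample.weight": "w0",
    "module.upsample.bias": "b0",
    "module.WN.0.start.weight": "w1",
}

# Same expression as in the tutorial code: drop the "module." substring.
state_dict = {key.replace("module.", ""): value for key, value in checkpoint_state.items()}
print(list(state_dict))

# Stricter variant that only strips the prefix when it is at the start of the key.
prefix = "module."
strict = {
    (key[len(prefix):] if key.startswith(prefix) else key): value
    for key, value in checkpoint_state.items()
}
print(strict == state_dict)
```

The two variants agree whenever `module.` appears only as a leading prefix; the stricter one avoids touching a key that happens to contain `module.` elsewhere in its name.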

Total running time of the script: (3 minutes 5.689 seconds)