
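TorchRL envs

Author: Vincent Moens

Environments play a crucial role in RL settings, often somewhat similar to datasets in supervised and unsupervised settings. The RL community has become quite familiar with the OpenAI gym API, which offers a flexible way of building environments, initializing them and interacting with them. However, many other libraries exist, and the way one interacts with them can be quite different from what gym expects.

Let us start by describing how TorchRL interacts with gym, which will serve as an introduction to the other frameworks.

Gym environments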

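To run this part of the tutorial, you will need a recent version of the gym library installed, as well as the Atari suite. You can get both by installing the corresponding packages with pip.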

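To unify all frameworks, torchrl environments are built inside the __init__ method with a private method called _build_env, which passes the arguments and keyword arguments to the root library builder.

With gym, this means that building an environment is as simple as: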

import torch

from matplotlib import pyplot as plt

from tensordict import TensorDict

from torchrl.envs.libs.gym import GymEnv

env = GymEnv("Pendulum-v1")

The list of available environments can be accessed through this command:

list(GymEnv.available_envs)[:10]

['ALE/Adventure-ram-v5', 'ALE/Adventure-v5', 'ALE/AirRaid-ram-v5', 'ALE/AirRaid-v5', 'ALE/Alien-ram-v5', 'ALE/Alien-v5', 'ALE/Amidar-ram-v5', 'ALE/Amidar-v5', 'ALE/Assault-ram-v5', 'ALE/Assault-v5']

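Env Specs

Like other frameworks, TorchRL envs have attributes that indicate what the spaces are for the observations, action, done state and reward. Because it often happens that more than one observation is retrieved, we expect the observation spec to be of type CompositeSpec (displayed as Composite in the printout below). Reward and action do not have this restriction: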

print("Env observation_spec: \n", env.observation_spec)

print("Env action_spec: \n", env.action_spec)

print("Env reward_spec: \n", env.reward_spec)

Env observation_spec:

Composite(

observation: BoundedContinuous(

shape=torch.Size([3]),

space=ContinuousBox(

low=Tensor(shape=torch.Size([3]), device=cpu, dtype=torch.float32, contiguous=True),

high=Tensor(shape=torch.Size([3]), device=cpu, dtype=torch.float32, contiguous=True)),

device=cpu,

dtype=torch.float32,

domain=continuous),

device=None,

shape=torch.Size([]))

Env action_spec:

BoundedContinuous(

shape=torch.Size([1]),

space=ContinuousBox(

low=Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.float32, contiguous=True),

high=Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.float32, contiguous=True)),

device=cpu,

dtype=torch.float32,

domain=continuous)

Env reward_spec:

UnboundedContinuous(

shape=torch.Size([1]),

space=ContinuousBox(

low=Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.float32, contiguous=True),

high=Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.float32, contiguous=True)),

device=cpu,

dtype=torch.float32,

domain=continuous)

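These specs come with a series of useful tools: one can assert whether a sample lies in the defined space. We can also use a heuristic to project a sample back into the space if it falls outside of it, and generate random (possibly uniformly distributed) numbers in that space: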

action = torch.ones(1) * 3

print("action is in bounds?\n", bool(env.action_spec.is_in(action)))

print("projected action: \n", env.action_spec.project(action))

action is in bounds?

False

projected action:

tensor([2.])

print("random action: \n", env.action_spec.rand())

random action:

tensor([-0.0048])
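To make the three spec utilities concrete, here is a minimal pure-Python sketch of a one-dimensional bounded space. `BoundedBox` and its fields are hypothetical names used only for illustration; this is not how TorchRL implements its specs. The projection shown (clamping to the nearest bound) is one possible heuristic, and the bounds of -2 and 2 are assumed from the projected value printed above.

```python
import random
from dataclasses import dataclass


@dataclass
class BoundedBox:
    """Hypothetical stand-in for a bounded 1-D spec (not a TorchRL class)."""

    low: float
    high: float

    def is_in(self, value: float) -> bool:
        # True when the value lies inside the closed interval [low, high].
        return self.low <= value <= self.high

    def project(self, value: float) -> float:
        # Clamp an out-of-bounds value to the nearest bound.
        return min(max(value, self.low), self.high)

    def rand(self, rng: random.Random) -> float:
        # Draw a uniformly distributed sample from the interval.
        return rng.uniform(self.low, self.high)


box = BoundedBox(low=-2.0, high=2.0)  # assumed bounds
in_bounds = box.is_in(3.0)            # 3.0 exceeds the upper bound
projected = box.project(3.0)          # clamped to the upper bound
sample = box.rand(random.Random(0))   # always inside the box
```

A sample produced by `rand` always satisfies `is_in`, which is the property that makes random actions safe to feed to an environment.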

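Among these specs, the done_spec deserves special attention. In TorchRL, all environments write end-of-trajectory signals of at least two types: "terminated" (indicating that the Markov Decision Process has reached a final state: the __episode__ is finished) and "done", indicating that this is the last step of a __trajectory__ (but not necessarily the end of the task). In general, a "done" entry that is True while "terminated" is False is caused by a "truncated" signal. Gym environments account for these three signals: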

print(env.done_spec)

Composite(

done: Categorical(

shape=torch.Size([1]),

space=CategoricalBox(n=2),

device=cpu,

dtype=torch.bool,

domain=discrete),

terminated: Categorical(

shape=torch.Size([1]),

space=CategoricalBox(n=2),

device=cpu,

dtype=torch.bool,

domain=discrete),

truncated: Categorical(

shape=torch.Size([1]),

space=CategoricalBox(n=2),

device=cpu,

dtype=torch.bool,

domain=discrete),

device=None,

shape=torch.Size([]))
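The relation between the three flags can be sketched in a few lines of plain Python. The function below is a hypothetical illustration that assumes truncation comes from a step limit; real environments may truncate for other reasons, and this is not TorchRL code.

```python
def end_flags(reached_final_state: bool, step: int, max_steps: int) -> dict:
    """Hypothetical helper computing the three end-of-trajectory flags."""
    # The MDP itself says the episode is over.
    terminated = reached_final_state
    # The trajectory is cut short by a step limit, not by the task.
    truncated = (not terminated) and step >= max_steps
    # "done" marks the last step of the trajectory in either case.
    return {
        "terminated": terminated,
        "truncated": truncated,
        "done": terminated or truncated,
    }


time_limit_hit = end_flags(reached_final_state=False, step=200, max_steps=200)
task_finished = end_flags(reached_final_state=True, step=3, max_steps=200)
```

In the first call "done" is True while "terminated" is False, which is exactly the case described above as being caused by a "truncated" signal.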

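Envs also come with an env.state_spec attribute of type CompositeSpec, which contains all the specs that are inputs to the env but are not the action. For stateful envs (e.g. gym) this will be empty most of the time. With stateless environments (e.g. Brax) it should also include a representation of the previous state, or any other input to the environment (including inputs at reset time).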

Seeding, resetting and steps

The basic operations on an environment are (1) set_seed, (2) reset and (3) step.

Let's see how these methods work with TorchRL:

torch.manual_seed(0) # make sure that all torch code is also reproducible

env.set_seed(0)

reset_data = env.reset()

print("reset data", reset_data)

reset data TensorDict(

fields={

done: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False),

observation: Tensor(shape=torch.Size([3]), device=cpu, dtype=torch.float32, is_shared=False),

terminated: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False),

truncated: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False)},

batch_size=torch.Size([]),

device=None,

is_shared=False)

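We can now execute a step in the environment. Since we don't have a policy, we can simply generate a random action:

from tensordict.nn import TensorDictModule

policy = TensorDictModule(env.action_spec.rand, in_keys=[], out_keys=["action"])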

policy(reset_data)

tensordict_out = env.step(reset_data)

By default, the tensordict returned by step is the same object as the input...

assert tensordict_out is reset_data

...but with new keys

tensordict_out

TensorDict(

fields={

action: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.float32, is_shared=False),

done: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False),

next: TensorDict(

fields={

done: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False),

observation: Tensor(shape=torch.Size([3]), device=cpu, dtype=torch.float32, is_shared=False),

reward: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.float32, is_shared=False),

terminated: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False),

truncated: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False)},

batch_size=torch.Size([]),

device=None,

is_shared=False),

observation: Tensor(shape=torch.Size([3]), device=cpu, dtype=torch.float32, is_shared=False),

terminated: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False),

truncated: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False)},

batch_size=torch.Size([]),

device=None,

is_shared=False)

What we just did (a random step using action_spec.rand()) can also be done via a simple shortcut.

env.rand_step()

TensorDict(

fields={

action: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.float32, is_shared=False),

next: TensorDict(

fields={

done: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False),

observation: Tensor(shape=torch.Size([3]), device=cpu, dtype=torch.float32, is_shared=False),

reward: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.float32, is_shared=False),

terminated: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False),

truncated: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False)},

batch_size=torch.Size([]),

device=None,

is_shared=False)},

batch_size=torch.Size([]),

device=None,

is_shared=False)

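The new key ("next", "observation") (like all keys under the "next" tensordict) has a special role in TorchRL: it indicates that it comes after the key with the same name but without the "next" prefix.

We provide a function, step_mdp, that executes a step in the tensordict: it returns a new tensordict updated such that t ← t':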

from torchrl.envs.utils import step_mdp

tensordict_out.set("some other key", torch.randn(1))

tensordict_tprime = step_mdp(tensordict_out)

print(tensordict_tprime)

print(

(

tensordict_tprime.get("observation")

== tensordict_out.get(("next", "observation"))

).all()

)

TensorDict(

fields={

done: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False),

observation: Tensor(shape=torch.Size([3]), device=cpu, dtype=torch.float32, is_shared=False),

some other key: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.float32, is_shared=False),

terminated: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False),

truncated: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False)},

batch_size=torch.Size([]),

device=None,

is_shared=False)

tensor(True)

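We can observe that step_mdp has removed all the time-dependent key-value pairs, but not "some other key". Also, the new observation matches the previous ("next", "observation") entry.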
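The effect of moving from t to t' can be sketched on plain nested dictionaries. The function `step_dict` below is a hypothetical, simplified stand-in written for illustration; it is not the implementation of step_mdp, and the choice of which keys to drop is an assumption based on the printed result above.

```python
def step_dict(data: dict) -> dict:
    """Simplified, dict-based sketch of moving from time t to t'."""
    # Keep root entries that are not tied to the transition itself.
    out = {k: v for k, v in data.items() if k not in ("next", "action")}
    # Promote the entries stored under "next" to the root, except the reward.
    out.update({k: v for k, v in data["next"].items() if k != "reward"})
    return out


transition = {
    "observation": [0.0, 1.0],
    "action": [0.5],
    "done": False,
    "some other key": 42,
    "next": {"observation": [0.1, 0.9], "reward": 1.0, "done": False},
}
advanced = step_dict(transition)
```

After the call, `advanced["observation"]` holds what used to live under `("next", "observation")`, while the unrelated key is carried over untouched.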

Finally, note that the env.reset method also accepts a tensordict to update:

data = TensorDict()

assert env.reset(data) is data

data

TensorDict(

fields={

done: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False),

observation: Tensor(shape=torch.Size([3]), device=cpu, dtype=torch.float32, is_shared=False),

terminated: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False),

truncated: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False)},

batch_size=torch.Size([]),

device=None,

is_shared=False)

Rollouts

The generic environment class provided by TorchRL lets you easily run a rollout for a given number of steps:

tensordict_rollout = env.rollout(max_steps=20, policy=policy)

print(tensordict_rollout)

TensorDict(

fields={

action: Tensor(shape=torch.Size([20, 1]), device=cpu, dtype=torch.float32, is_shared=False),

done: Tensor(shape=torch.Size([20, 1]), device=cpu, dtype=torch.bool, is_shared=False),

next: TensorDict(

fields={

done: Tensor(shape=torch.Size([20, 1]), device=cpu, dtype=torch.bool, is_shared=False),

observation: Tensor(shape=torch.Size([20, 3]), device=cpu, dtype=torch.float32, is_shared=False),

reward: Tensor(shape=torch.Size([20, 1]), device=cpu, dtype=torch.float32, is_shared=False),

terminated: Tensor(shape=torch.Size([20, 1]), device=cpu, dtype=torch.bool, is_shared=False),

truncated: Tensor(shape=torch.Size([20, 1]), device=cpu, dtype=torch.bool, is_shared=False)},

batch_size=torch.Size([20]),

device=None,

is_shared=False),

observation: Tensor(shape=torch.Size([20, 3]), device=cpu, dtype=torch.float32, is_shared=False),

terminated: Tensor(shape=torch.Size([20, 1]), device=cpu, dtype=torch.bool, is_shared=False),

truncated: Tensor(shape=torch.Size([20, 1]), device=cpu, dtype=torch.bool, is_shared=False)},

batch_size=torch.Size([20]),

device=None,

is_shared=False)

The resulting tensordict has a batch_size of [20], which is the length of the trajectory. We can check that the observations match their next values:

(

tensordict_rollout.get("observation")[1:]

== tensordict_rollout.get(("next", "observation"))[:-1]

).all()

tensor(True)
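The same consistency property can be reproduced with a toy loop in plain Python. `CounterEnv` and `rollout` below are hypothetical illustrations of the idea (collect one entry per step, then carry the next observation forward); they do not reflect TorchRL's actual rollout implementation.

```python
class CounterEnv:
    """Hypothetical toy environment whose observation is a running counter."""

    def reset(self) -> dict:
        self.t = 0
        return {"observation": self.t}

    def step(self, data: dict) -> dict:
        self.t += data["action"]
        data["next"] = {"observation": self.t, "reward": 1.0}
        return data


def rollout(env, policy, max_steps: int) -> list:
    steps = []
    data = env.reset()
    for _ in range(max_steps):
        data["action"] = policy(data)
        data = env.step(data)
        steps.append(data)
        # The next observation becomes the current one for the following step.
        data = {"observation": data["next"]["observation"]}
    return steps


trajectory = rollout(CounterEnv(), policy=lambda d: 1, max_steps=5)
obs = [s["observation"] for s in trajectory]
next_obs = [s["next"]["observation"] for s in trajectory]
```

Shifting `obs` by one step gives `next_obs` without its last element, mirroring the check performed on the tensordict above.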

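frame_skip

In some instances, it is useful to pass a frame_skip argument to apply the same action for several consecutive frames.

The resulting tensordict will contain only the last frame observed in the sequence, but the rewards will be summed over the number of frames.

If the environment reaches a done state during this process, it will stop and return the result of the truncated chain.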

env = GymEnv("Pendulum-v1", frame_skip=4)

env.reset()

TensorDict(

fields={

done: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False),

observation: Tensor(shape=torch.Size([3]), device=cpu, dtype=torch.float32, is_shared=False),

terminated: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False),

truncated: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False)},

batch_size=torch.Size([]),

device=None,

is_shared=False)
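The frame-skipping logic described above (repeat the action, sum the rewards, stop early on done) can be sketched as follows. `skip_frames` and `make_toy_step` are hypothetical helpers operating on a plain step function; this is an illustration under those assumptions, not the code TorchRL runs.

```python
from typing import Callable, Tuple

# A hypothetical step function: action -> (observation, reward, done).
StepFn = Callable[[float], Tuple[float, float, bool]]


def skip_frames(step_fn: StepFn, action: float, frame_skip: int):
    """Repeat `action` for up to `frame_skip` frames, summing the rewards."""
    total_reward = 0.0
    for _ in range(frame_skip):
        observation, reward, done = step_fn(action)
        total_reward += reward
        if done:
            # Stop early and return the result of the truncated chain.
            break
    # Only the last observed frame is returned.
    return observation, total_reward, done


def make_toy_step(done_at: int) -> StepFn:
    state = {"t": 0}

    def step(action: float):
        state["t"] += 1
        return float(state["t"]), 1.0, state["t"] >= done_at

    return step


full = skip_frames(make_toy_step(done_at=100), action=0.0, frame_skip=4)
early = skip_frames(make_toy_step(done_at=2), action=0.0, frame_skip=4)
```

In the second call the toy environment is done after two frames, so only two rewards are summed.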

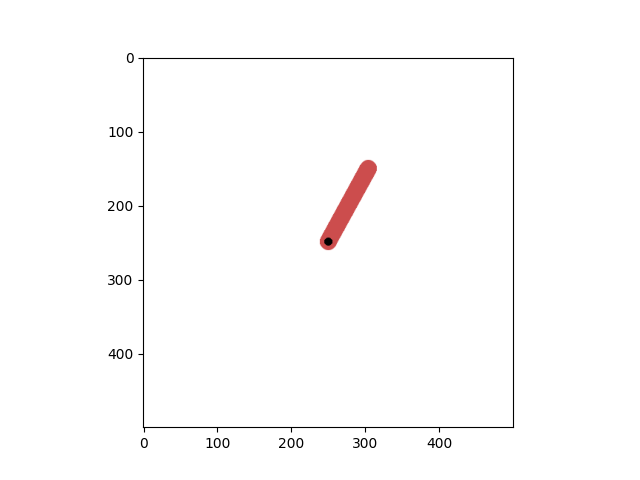

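Rendering

Rendering plays an important role in many RL settings, which is why the generic environment class from torchrl provides a from_pixels keyword argument that lets the user quickly request image-based environments: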

env = GymEnv("Pendulum-v1", from_pixels=True)

data = env.reset()

env.close()

plt.imshow(data.get("pixels").numpy())

<matplotlib.image.AxesImage object at 0x7fd0118743a0>

Let's have a look at what the tensordict contains:

data

TensorDict(

fields={

done: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False),

pixels: Tensor(shape=torch.Size([500, 500, 3]), device=cpu, dtype=torch.uint8, is_shared=False),

terminated: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False),

truncated: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False)},

batch_size=torch.Size([]),

device=None,

is_shared=False)

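We still have a "state" entry that describes what "observation" described in the previous case. The naming difference comes from the fact that gym now returns a dictionary: TorchRL takes the names from that dictionary when it exists, and otherwise names the step output "observation". In short, this is a consequence of what the gym environment step method returns.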

It is also possible to discard this supplementary output by asking for the pixels only:

env = GymEnv("Pendulum-v1", from_pixels=True, pixels_only=True)

env.reset()

env.close()

Some environments only come in an image-based format:

env = GymEnv("ALE/Pong-v5")

print("from pixels: ", env.from_pixels)

print("data: ", env.reset())

env.close()

from pixels: True

data: TensorDict(

fields={

done: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False),

pixels: Tensor(shape=torch.Size([210, 160, 3]), device=cpu, dtype=torch.uint8, is_shared=False),

terminated: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False),

truncated: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False)},

batch_size=torch.Size([]),

device=None,

is_shared=False)

DeepMind Control environments

To run this part of the tutorial, make sure you have installed dm_control:

$ pip install dm_control

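We also provide a wrapper for the DM Control suite. Again, building an environment is easy: first, let's look at which environments can be accessed. Here, available_envs lists each env together with its possible tasks: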

from matplotlib import pyplot as plt

from torchrl.envs.libs.dm_control import DMControlEnv

DMControlEnv.available_envs

[('acrobot', ['swingup', 'swingup_sparse']), ('ball_in_cup', ['catch']), ('cartpole', ['balance', 'balance_sparse', 'swingup', 'swingup_sparse', 'three_poles', 'two_poles']), ('cheetah', ['run']), ('finger', ['spin', 'turn_easy', 'turn_hard']), ('fish', ['upright', 'swim']), ('hopper', ['stand', 'hop']), ('humanoid', ['stand', 'walk', 'run', 'run_pure_state']), ('manipulator', ['bring_ball', 'bring_peg', 'insert_ball', 'insert_peg']), ('pendulum', ['swingup']), ('point_mass', ['easy', 'hard']), ('reacher', ['easy', 'hard']), ('swimmer', ['swimmer6', 'swimmer15']), ('walker', ['stand', 'walk', 'run']), ('dog', ['fetch', 'run', 'stand', 'trot', 'walk']), ('humanoid_CMU', ['run', 'stand', 'walk']), ('lqr', ['lqr_2_1', 'lqr_6_2']), ('quadruped', ['escape', 'fetch', 'run', 'walk']), ('stacker', ['stack_2', 'stack_4'])]

env = DMControlEnv("acrobot", "swingup")

data = env.reset()

print("result of reset: ", data)

env.close()

result of reset: TensorDict(

fields={

done: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False),

orientations: Tensor(shape=torch.Size([4]), device=cpu, dtype=torch.float64, is_shared=False),

terminated: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False),

truncated: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False),

velocity: Tensor(shape=torch.Size([2]), device=cpu, dtype=torch.float64, is_shared=False)},

batch_size=torch.Size([]),

device=None,

is_shared=False)

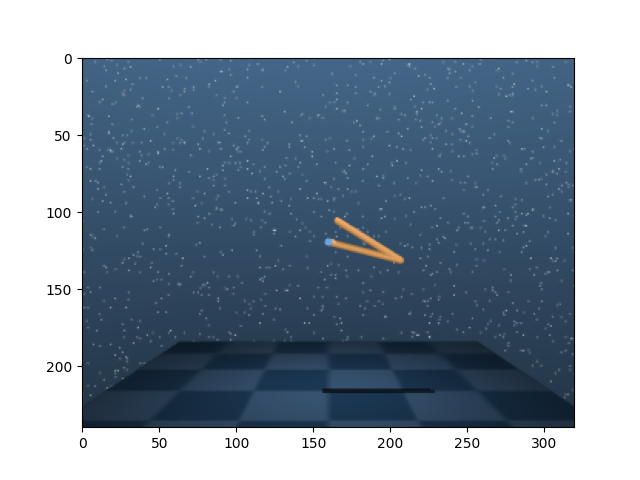

Of course, we can also use pixel-based environments:

env = DMControlEnv("acrobot", "swingup", from_pixels=True, pixels_only=True)

data = env.reset()

print("result of reset: ", data)

plt.imshow(data.get("pixels").numpy())

env.close()

result of reset: TensorDict(

fields={

done: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False),

pixels: Tensor(shape=torch.Size([240, 320, 3]), device=cpu, dtype=torch.uint8, is_shared=False),

terminated: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False),

truncated: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False)},

batch_size=torch.Size([]),

device=None,

is_shared=False)

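Transforming envs

It is common to pre-process the output of an environment before it is read by the policy or stored in a buffer.

In many instances, the RL community has adopted a wrapping scheme of the type

$ env_transformed = wrapper1(wrapper2(env))

to transform environments. This has numerous advantages: it makes accessing the environment specs obvious (the outer wrapper is what the external world sees), and it makes it easy to interact with vectorized environments. However, it also makes it hard to access inner environments: say one wants to remove a wrapper (e.g. wrapper2) from the chain, this operation requires us to collect

$ env0 = env.env.env

$ env_transformed_bis = wrapper1(env0)

TorchRL takes the stance of using sequences of transforms instead, as is done in other PyTorch domain libraries (e.g. torchvision). This approach is also similar to the way distributions are transformed in torch.distributions, where a TransformedDistribution object is built around a base_dist distribution and (a sequence of) transforms.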

from torchrl.envs.transforms import ToTensorImage, TransformedEnv

# ToTensorImage converts a numpy-like uint8 image (H, W, C) into a float tensor (C, H, W)

env = DMControlEnv("acrobot", "swingup", from_pixels=True, pixels_only=True)

print("reset before transform: ", env.reset())

env = TransformedEnv(env, ToTensorImage())

print("reset after transform: ", env.reset())

env.close()

reset before transform: TensorDict(

fields={

done: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False),

pixels: Tensor(shape=torch.Size([240, 320, 3]), device=cpu, dtype=torch.uint8, is_shared=False),

terminated: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False),

truncated: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False)},

batch_size=torch.Size([]),

device=None,

is_shared=False)

reset after transform: TensorDict(

fields={

done: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False),

pixels: Tensor(shape=torch.Size([3, 240, 320]), device=cpu, dtype=torch.float32, is_shared=False),

terminated: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False),

truncated: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False)},

batch_size=torch.Size([]),

device=None,

is_shared=False)

To compose transforms, simply use the Compose class:

from torchrl.envs.transforms import Compose, Resize

env = DMControlEnv("acrobot", "swingup", from_pixels=True, pixels_only=True)

env = TransformedEnv(env, Compose(ToTensorImage(), Resize(32, 32)))

env.reset()

TensorDict(

fields={

done: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False),

pixels: Tensor(shape=torch.Size([3, 32, 32]), device=cpu, dtype=torch.float32, is_shared=False),

terminated: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False),

truncated: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False)},

batch_size=torch.Size([]),

device=None,

is_shared=False)
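The idea behind a sequence of transforms, as opposed to nested wrappers, can be sketched with plain functions. `SimpleCompose`, `scale` and `halve` are hypothetical names for illustration and are unrelated to the actual Compose implementation; the point is only that the transforms live in a flat list that is applied in order.

```python
from typing import Callable, List

Transform = Callable[[dict], dict]


class SimpleCompose:
    """Hypothetical sequence of transforms applied in order."""

    def __init__(self, *transforms: Transform):
        self.transforms: List[Transform] = list(transforms)

    def __call__(self, data: dict) -> dict:
        for transform in self.transforms:
            data = transform(data)
        return data


def scale(data: dict) -> dict:
    # Map 0-255 pixel values to floats in [0, 1].
    return {**data, "pixels": [p / 255 for p in data["pixels"]]}


def halve(data: dict) -> dict:
    # Keep every other pixel, a crude stand-in for resizing.
    return {**data, "pixels": data["pixels"][::2]}


pipeline = SimpleCompose(scale, halve)
out = pipeline({"pixels": [0, 255, 51, 255]})

# Removing a step is a list operation; there are no nested wrappers to unwind.
pipeline.transforms.remove(halve)
out_without_halve = pipeline({"pixels": [0, 255]})
```

Because the chain is a list, dropping or reordering a step does not require rebuilding the environment from its innermost layer.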

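Transforms can also be added one at a time:

from torchrl.envs.transforms import GrayScale

env.append_transform(GrayScale())

env.reset()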

TensorDict(

fields={

done: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False),

pixels: Tensor(shape=torch.Size([1, 32, 32]), device=cpu, dtype=torch.float32, is_shared=False),

terminated: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False),

truncated: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False)},

batch_size=torch.Size([]),

device=None,

is_shared=False)

As expected, the metadata is updated too:

print("original obs spec: ", env.base_env.observation_spec)

print("current obs spec: ", env.observation_spec)

original obs spec: Composite(

pixels: UnboundedDiscrete(

shape=torch.Size([240, 320, 3]),

space=ContinuousBox(

low=Tensor(shape=torch.Size([240, 320, 3]), device=cpu, dtype=torch.uint8, contiguous=True),

high=Tensor(shape=torch.Size([240, 320, 3]), device=cpu, dtype=torch.uint8, contiguous=True)),

device=cpu,

dtype=torch.uint8,

domain=discrete),

device=None,

shape=torch.Size([]))

current obs spec: Composite(

pixels: UnboundedContinuous(

shape=torch.Size([1, 32, 32]),

space=ContinuousBox(

low=Tensor(shape=torch.Size([1, 32, 32]), device=cpu, dtype=torch.float32, contiguous=True),

high=Tensor(shape=torch.Size([1, 32, 32]), device=cpu, dtype=torch.float32, contiguous=True)),

device=cpu,

dtype=torch.float32,

domain=continuous),

device=None,

shape=torch.Size([]))

We can also concatenate tensors if needed:

from torchrl.envs.transforms import CatTensors

env = DMControlEnv("acrobot", "swingup")

print("keys before concat: ", env.reset())

env = TransformedEnv(

env,

CatTensors(in_keys=["orientations", "velocity"], out_key="observation"),

)

print("keys after concat: ", env.reset())

keys before concat: TensorDict(

fields={

done: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False),

orientations: Tensor(shape=torch.Size([4]), device=cpu, dtype=torch.float64, is_shared=False),

terminated: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False),

truncated: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False),

velocity: Tensor(shape=torch.Size([2]), device=cpu, dtype=torch.float64, is_shared=False)},

batch_size=torch.Size([]),

device=None,

is_shared=False)

keys after concat: TensorDict(

fields={

done: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False),

observation: Tensor(shape=torch.Size([6]), device=cpu, dtype=torch.float64, is_shared=False),

terminated: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False),

truncated: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False)},

batch_size=torch.Size([]),

device=None,

is_shared=False)

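This feature makes it easy to modify the set of transforms applied to an environment's input and output. In fact, transforms are run both before and after a step is executed: for the pre-step pass, the in_keys_inv list of keys is passed to the _inv_apply_transform method. An example of such a transform would be converting floating-point actions (output by a neural network) to the double dtype (required by the wrapped environment). After the step is executed, the _apply_transform method is executed on the keys indicated by the in_keys list.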
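The two passes can be illustrated with a small pure-Python sketch. `CastAction`, `base_step` and `transformed_step` are hypothetical names, and the int-to-float cast stands in for the float-to-double example mentioned above; the real transforms operate on tensordicts through the private methods named in the text.

```python
class CastAction:
    """Hypothetical transform with a pre-step (inverse) and post-step (forward) pass."""

    in_keys = ["observation"]   # keys processed after the step
    in_keys_inv = ["action"]    # keys processed before the step

    def inv(self, data: dict) -> dict:
        # Pre-step: convert the policy output to what the base env expects.
        for key in self.in_keys_inv:
            data[key] = float(data[key])
        return data

    def forward(self, data: dict) -> dict:
        # Post-step: post-process the environment output.
        for key in self.in_keys:
            data[key] = round(data[key], 2)
        return data


def base_step(data: dict) -> dict:
    # Toy base environment that insists on a float action.
    assert isinstance(data["action"], float)
    return {"observation": data["action"] * 0.3333}


def transformed_step(transform: CastAction, data: dict) -> dict:
    data = transform.inv(data)      # inverse pass on the inputs
    out = base_step(data)           # the wrapped environment steps
    return transform.forward(out)   # forward pass on the outputs


result = transformed_step(CastAction(), {"action": 3})
```

The integer action is cast before it reaches the base environment, and the observation is post-processed on the way out.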

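Another interesting feature of environment transforms is that they let the user retrieve the equivalent of env.env in the wrapped case, in other words the parent environment. The parent environment can be retrieved by calling transform.parent: the returned environment is a TransformedEnv containing all the transforms up to (but not including) the current transform. This is used, for instance, in the NoopResetEnv case, which executes the following steps when reset: it resets the parent environment, then executes a certain number of random steps in that environment.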

env = DMControlEnv("acrobot", "swingup")

env = TransformedEnv(env)

env.append_transform(

CatTensors(in_keys=["orientations", "velocity"], out_key="observation")

)

env.append_transform(GrayScale())

print("env: \n", env)

print("GrayScale transform parent env: \n", env.transform[1].parent)

print("CatTensors transform parent env: \n", env.transform[0].parent)

env:

TransformedEnv(

env=DMControlEnv(env=acrobot, task=swingup, batch_size=torch.Size([])),

transform=Compose(

CatTensors(in_keys=['orientations', 'velocity'], out_key=observation),

GrayScale(keys=['pixels'])))

GrayScale transform parent env:

TransformedEnv(

env=DMControlEnv(env=acrobot, task=swingup, batch_size=torch.Size([])),

transform=Compose(

CatTensors(in_keys=['orientations', 'velocity'], out_key=observation)))

CatTensors transform parent env:

TransformedEnv(

env=DMControlEnv(env=acrobot, task=swingup, batch_size=torch.Size([])),

transform=Compose(

))
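The "up to but not including" rule visible in the printout above amounts to a list slice. The helper below is a hypothetical illustration operating on transform names, not on real transform objects.

```python
def parent_transforms(transforms: list, index: int) -> list:
    """Return the transforms seen by the parent of transforms[index]."""
    # Everything that runs before the transform at `index`, excluding itself.
    return transforms[:index]


chain = ["CatTensors", "GrayScale"]
grayscale_parent = parent_transforms(chain, 1)
cattensors_parent = parent_transforms(chain, 0)
```

The parent of the second transform still contains the first one, while the parent of the first transform has no transform at all, matching the empty Compose shown above.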

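Environment device

Transforms can work on device, which can bring a significant speedup when operations are moderately or highly computationally demanding. These include ToTensorImage, Resize, GrayScale, etc.

One could reasonably ask what this implies on the wrapped environment side. For regular environments, very little: the operations still happen on the device where they are supposed to happen. The environment device attribute in torchrl indicates on which device the incoming data is supposed to be and on which device the output data will be. Casting from and to that device is the responsibility of the torchrl environment class. The big advantages of storing data on GPU are (1) the speedup of transforms mentioned above and (2) sharing data amongst workers in multiprocessing settings.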

from torchrl.envs.transforms import CatTensors, GrayScale, TransformedEnv

env = DMControlEnv("acrobot", "swingup")

env = TransformedEnv(env)

env.append_transform(

CatTensors(in_keys=["orientations", "velocity"], out_key="observation")

)

if torch.cuda.is_available():  # move the env to the GPU only when one is present
    env.to("cuda:0")

env.reset()

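Running environments in parallel

TorchRL provides utilities to run environments in parallel. The various environments are expected to read and return tensors of similar shapes and dtypes (although one could design masking functions to make this possible when those tensors differ in shape). Creating such environments is quite easy. Let us look at the simplest case: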

from torchrl.envs import ParallelEnv

def env_make():
    return GymEnv("Pendulum-v1")

parallel_env = ParallelEnv(3, env_make) # -> creates 3 envs in parallel

parallel_env = ParallelEnv(

3, [env_make, env_make, env_make]

) # similar to the previous command

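The SerialEnv class is similar to ParallelEnv, except that the environments are run sequentially. This is mostly useful for debugging purposes.

ParallelEnv instances are created in lazy mode: the environments only start running when called. This allows us to move ParallelEnv objects from process to process without worrying too much about running processes. A ParallelEnv can be started by calling start, reset, or simply step (if reset does not need to be called first).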

parallel_env.reset()

TensorDict(

fields={

done: Tensor(shape=torch.Size([3, 1]), device=cpu, dtype=torch.bool, is_shared=False),

observation: Tensor(shape=torch.Size([3, 3]), device=cpu, dtype=torch.float32, is_shared=False),

terminated: Tensor(shape=torch.Size([3, 1]), device=cpu, dtype=torch.bool, is_shared=False),

truncated: Tensor(shape=torch.Size([3, 1]), device=cpu, dtype=torch.bool, is_shared=False)},

batch_size=torch.Size([3]),

device=None,

is_shared=False)

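One can check that the parallel environment has the right batch size. Conventionally, the first part of the batch_size indicates the batch and the second the time frame. Let's check that with the rollout method: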

parallel_env.rollout(max_steps=20)

TensorDict(

fields={

action: Tensor(shape=torch.Size([3, 20, 1]), device=cpu, dtype=torch.float32, is_shared=False),

done: Tensor(shape=torch.Size([3, 20, 1]), device=cpu, dtype=torch.bool, is_shared=False),

next: TensorDict(

fields={

done: Tensor(shape=torch.Size([3, 20, 1]), device=cpu, dtype=torch.bool, is_shared=False),

observation: Tensor(shape=torch.Size([3, 20, 3]), device=cpu, dtype=torch.float32, is_shared=False),

reward: Tensor(shape=torch.Size([3, 20, 1]), device=cpu, dtype=torch.float32, is_shared=False),

terminated: Tensor(shape=torch.Size([3, 20, 1]), device=cpu, dtype=torch.bool, is_shared=False),

truncated: Tensor(shape=torch.Size([3, 20, 1]), device=cpu, dtype=torch.bool, is_shared=False)},

batch_size=torch.Size([3, 20]),

device=None,

is_shared=False),

observation: Tensor(shape=torch.Size([3, 20, 3]), device=cpu, dtype=torch.float32, is_shared=False),

terminated: Tensor(shape=torch.Size([3, 20, 1]), device=cpu, dtype=torch.bool, is_shared=False),

truncated: Tensor(shape=torch.Size([3, 20, 1]), device=cpu, dtype=torch.bool, is_shared=False)},

batch_size=torch.Size([3, 20]),

device=None,

is_shared=False)

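Closing parallel environments

Important: before closing a program, make sure to close the parallel environment. In general, even with regular environments, it is good practice to end a function with a call to close. In some instances, TorchRL will throw an error if this is not done (often at the end of a program, when the environment goes out of scope!).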

parallel_env.close()

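Seeding

When seeding a parallel environment, the difficulty we face is that we do not want to provide the same seed to all environments. The heuristic used by TorchRL is to produce a deterministic chain of seeds from the input seed, such that the chain can be reconstructed from any of its elements. All set_seed methods return the next seed to be used, so that one can easily keep the chain going given the last seed. This is useful when several collectors each contain a ParallelEnv instance and we want every sub-sub-environment to have a different seed.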

out_seed = parallel_env.set_seed(10)

print(out_seed)

del parallel_env

3288080526
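A seed chain of this kind can be sketched with the standard library. The derivation rule in `next_seed` is an arbitrary choice made for illustration and is an assumption, not the generator TorchRL uses; the property being shown is that each seed fully determines its successor, so the chain can be resumed from any element.

```python
import random


def next_seed(seed: int) -> int:
    # Derive the following seed deterministically from the current one
    # (hypothetical rule, chosen only for this sketch).
    return random.Random(seed).randrange(2**32)


def seed_envs(first_seed: int, num_envs: int):
    """Give each env its own seed and return the seed that continues the chain."""
    seeds = []
    seed = first_seed
    for _ in range(num_envs):
        seeds.append(seed)
        seed = next_seed(seed)
    return seeds, seed


seeds_a, continue_from = seed_envs(10, num_envs=3)
seeds_b, _ = seed_envs(continue_from, num_envs=3)
```

A second group of environments seeded with `continue_from` picks up exactly where the first group stopped, which is how several collectors can avoid sharing seeds.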

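Accessing environment attributes

It sometimes happens that a wrapped environment has an attribute of interest. First, note that TorchRL environment wrappers include the tooling needed to access such an attribute. Here is an example: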

from time import sleep

from uuid import uuid1

def env_make():
    env = GymEnv("Pendulum-v1")
    env._env.foo = f"bar_{uuid1()}"
    env._env.get_something = lambda r: r + 1
    return env

env = env_make()

# Goes through env._env

env.foo

'bar_bd7cd616-bece-11ef-b619-0242ac110002'

parallel_env = ParallelEnv(3, env_make) # -> creates 3 envs in parallel

# env has not been started --> error:

try:
    parallel_env.foo
except RuntimeError:
    print("Aargh what did I do!")

sleep(2) # make sure we don't get ahead of ourselves

Aargh what did I do!

if parallel_env.is_closed:
    parallel_env.start()

foo_list = parallel_env.foo

foo_list # needs to be instantiated, for instance using list

<torchrl.envs.batched_envs._dispatch_caller_parallel object at 0x7fd010f2e2f0>

list(foo_list)

['bar_c24373ee-bece-11ef-a45f-0242ac110002', 'bar_c246e600-bece-11ef-8a72-0242ac110002', 'bar_c245b17c-bece-11ef-9b72-0242ac110002']

Similarly, methods can also be accessed:

something = parallel_env.get_something(0)

print(something)

[1, 1, 1]
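The behaviour shown above (one value per sub-environment, for attributes and methods alike) can be mimicked in a single process with `__getattr__`. `Worker` and `Batched` are hypothetical classes for illustration; the real ParallelEnv talks to separate processes and returns a lazy object that has to be materialized with list.

```python
class Worker:
    """Hypothetical stand-in for one sub-environment."""

    def __init__(self, name: str):
        self.foo = f"bar_{name}"

    def get_something(self, r: int) -> int:
        return r + 1


class Batched:
    """Forwards unknown attribute lookups to every worker (illustrative only)."""

    def __init__(self, workers):
        self._workers = list(workers)

    def __getattr__(self, name: str):
        # Only called when normal lookup fails, e.g. for `foo`.
        values = [getattr(w, name) for w in self._workers]
        if all(callable(v) for v in values):
            # For methods, return a function that calls each worker in turn.
            return lambda *args, **kwargs: [v(*args, **kwargs) for v in values]
        return values


batch = Batched(Worker(str(i)) for i in range(3))
foo_values = batch.foo
something = batch.get_something(0)
```

Both lookups return a list with one entry per worker, in worker order.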

parallel_env.close()

del parallel_env

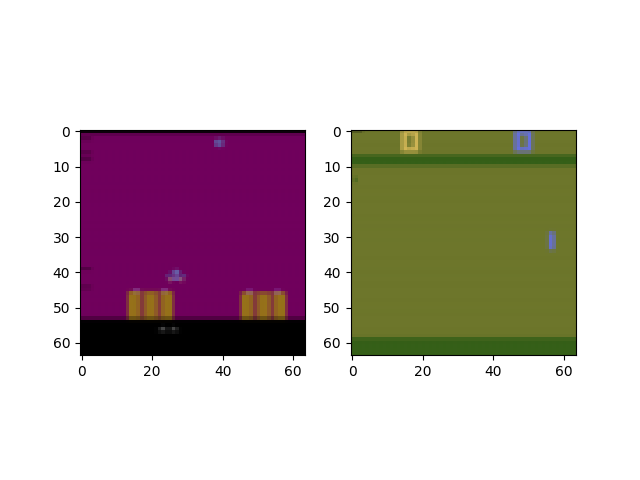

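kwargs for parallel environments

One may want to provide kwargs to the various environments. This can be done either at construction time or afterwards: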

from torchrl.envs import ParallelEnv

def env_make(env_name):
    env = TransformedEnv(
        GymEnv(env_name, from_pixels=True, pixels_only=True),
        Compose(ToTensorImage(), Resize(64, 64)),
    )
    return env

parallel_env = ParallelEnv(

2,

[env_make, env_make],

create_env_kwargs=[{"env_name": "ALE/AirRaid-v5"}, {"env_name": "ALE/Pong-v5"}],

)

data = parallel_env.reset()

plt.figure()

plt.subplot(121)

plt.imshow(data[0].get("pixels").permute(1, 2, 0).numpy())

plt.subplot(122)

plt.imshow(data[1].get("pixels").permute(1, 2, 0).numpy())

parallel_env.close()

del parallel_env

from matplotlib import pyplot as plt

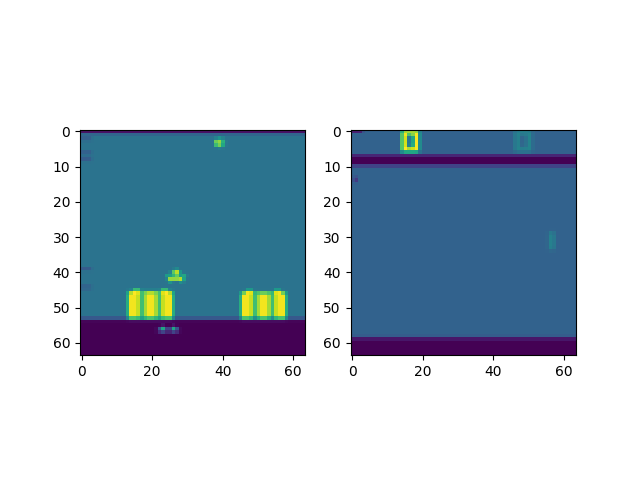

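Transforming parallel environments

There are two equivalent ways of transforming parallel environments: in each process separately, or on the main process. It is even possible to do both. One can therefore think carefully about the transform design to leverage device capabilities (e.g. transforms on CUDA devices) and to vectorize operations on the main process where possible.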

from torchrl.envs import (

Compose,

GrayScale,

ParallelEnv,

Resize,

ToTensorImage,

TransformedEnv,

)

def env_make(env_name):
    env = TransformedEnv(
        GymEnv(env_name, from_pixels=True, pixels_only=True),
        Compose(ToTensorImage(), Resize(64, 64)),
    )  # transforms on remote processes
    return env

parallel_env = ParallelEnv(

2,

[env_make, env_make],

create_env_kwargs=[{"env_name": "ALE/AirRaid-v5"}, {"env_name": "ALE/Pong-v5"}],

)

parallel_env = TransformedEnv(parallel_env, GrayScale()) # transforms on main process

data = parallel_env.reset()

print("grayscale data: ", data)

plt.figure()

plt.subplot(121)

plt.imshow(data[0].get("pixels").permute(1, 2, 0).numpy())

plt.subplot(122)

plt.imshow(data[1].get("pixels").permute(1, 2, 0).numpy())

parallel_env.close()

del parallel_env

grayscale data: TensorDict(

fields={

done: Tensor(shape=torch.Size([2, 1]), device=cpu, dtype=torch.bool, is_shared=False),

pixels: Tensor(shape=torch.Size([2, 1, 64, 64]), device=cpu, dtype=torch.float32, is_shared=False),

terminated: Tensor(shape=torch.Size([2, 1]), device=cpu, dtype=torch.bool, is_shared=False),

truncated: Tensor(shape=torch.Size([2, 1]), device=cpu, dtype=torch.bool, is_shared=False)},

batch_size=torch.Size([2]),

device=None,

is_shared=False)

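VecNorm

In RL, we commonly face the problem of normalizing data before feeding it to a model. Sometimes, we can get a good approximation of the normalizing statistics from data gathered in the environment with, say, a random policy (or demonstrations). It may, however, be preferable to normalize the data "on the fly", progressively updating the normalizing constants to reflect what has been observed so far. This is particularly useful when we expect the normalizing statistics to change as performance on the task changes, or when the environment evolves due to external factors.

Caution: this feature should be used carefully with off-policy learning, as old data will be "deprecated" because it was normalized with previously valid statistics. In on-policy settings too, this feature makes learning non-stationary and may have unexpected effects. Users are therefore advised to rely on this feature with caution and to compare it with normalization based on fixed normalizing constants.

In a regular setting, using VecNorm is quite easy: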

from torchrl.envs.libs.gym import GymEnv

from torchrl.envs.transforms import TransformedEnv, VecNorm

env = TransformedEnv(GymEnv("Pendulum-v1"), VecNorm())

data = env.rollout(max_steps=100)

print("mean: :", data.get("observation").mean(0)) # Approx 0

print("std: :", data.get("observation").std(0)) # Approx 1

mean: : tensor([-0.3473, -0.0822, -0.1379])

std: : tensor([1.0996, 1.2535, 1.2265])
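On-the-fly normalization of this kind can be sketched by tracking a count, a running sum and a running sum of squares. `RunningNorm` is a hypothetical scalar version written for illustration under the assumption of no decay; it is not the VecNorm implementation, which works on tensors and can share its statistics across processes.

```python
import math


class RunningNorm:
    """Hypothetical scalar normalizer tracking count, sum and sum of squares."""

    def __init__(self, eps: float = 1e-4):
        self.count = 0.0
        self.sum = 0.0
        self.ssq = 0.0
        self.eps = eps

    def update(self, x: float) -> None:
        # Accumulate the statistics of everything observed so far.
        self.count += 1.0
        self.sum += x
        self.ssq += x * x

    def normalize(self, x: float) -> float:
        mean = self.sum / self.count
        var = max(self.ssq / self.count - mean * mean, 0.0)
        # Guard against a zero standard deviation.
        return (x - mean) / max(math.sqrt(var), self.eps)


norm = RunningNorm()
for value in [1.0, 2.0, 3.0, 4.0]:
    norm.update(value)

mean = norm.sum / norm.count
centered = norm.normalize(2.5)
```

Because the statistics keep moving as new data arrives, the same raw value can map to different normalized values at different times, which is the source of the caution expressed above.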

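With parallel envs, things are slightly more complicated, as we need to share the running statistics amongst the processes. We created a class, EnvCreator, that is responsible for looking at an environment creation method, retrieving the tensordicts to share amongst processes in the environment class, and pointing each process to the right common, shared data once created: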

from torchrl.envs import EnvCreator, ParallelEnv

from torchrl.envs.libs.gym import GymEnv

from torchrl.envs.transforms import TransformedEnv, VecNorm

make_env = EnvCreator(lambda: TransformedEnv(GymEnv("CartPole-v1"), VecNorm(decay=1.0)))

env = ParallelEnv(3, make_env)

print("env state dict:")

sd = TensorDict(make_env.state_dict())

print(sd)

# Zeroes all tensors

sd *= 0

data = env.rollout(max_steps=5)

print("data: ", data)

print("mean: :", data.get("observation").view(-1, 3).mean(0)) # Approx 0

print("std: :", data.get("observation").view(-1, 3).std(0)) # Approx 1

env state dict:

TensorDict(

fields={

_extra_state: TensorDict(

fields={

observation_count: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.float32, is_shared=False),

observation_ssq: Tensor(shape=torch.Size([4]), device=cpu, dtype=torch.float32, is_shared=False),

observation_sum: Tensor(shape=torch.Size([4]), device=cpu, dtype=torch.float32, is_shared=False),

reward_count: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.float32, is_shared=False),

reward_ssq: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.float32, is_shared=False),

reward_sum: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.float32, is_shared=False)},

batch_size=torch.Size([]),

device=None,

is_shared=False)},

batch_size=torch.Size([]),

device=None,

is_shared=False)

data: TensorDict(

fields={

action: Tensor(shape=torch.Size([3, 5, 2]), device=cpu, dtype=torch.int64, is_shared=False),

done: Tensor(shape=torch.Size([3, 5, 1]), device=cpu, dtype=torch.bool, is_shared=False),

next: TensorDict(

fields={

done: Tensor(shape=torch.Size([3, 5, 1]), device=cpu, dtype=torch.bool, is_shared=False),

observation: Tensor(shape=torch.Size([3, 5, 4]), device=cpu, dtype=torch.float32, is_shared=False),

reward: Tensor(shape=torch.Size([3, 5, 1]), device=cpu, dtype=torch.float32, is_shared=False),

terminated: Tensor(shape=torch.Size([3, 5, 1]), device=cpu, dtype=torch.bool, is_shared=False),

truncated: Tensor(shape=torch.Size([3, 5, 1]), device=cpu, dtype=torch.bool, is_shared=False)},

batch_size=torch.Size([3, 5]),

device=None,

is_shared=False),

observation: Tensor(shape=torch.Size([3, 5, 4]), device=cpu, dtype=torch.float32, is_shared=False),

terminated: Tensor(shape=torch.Size([3, 5, 1]), device=cpu, dtype=torch.bool, is_shared=False),

truncated: Tensor(shape=torch.Size([3, 5, 1]), device=cpu, dtype=torch.bool, is_shared=False)},

batch_size=torch.Size([3, 5]),

device=None,

is_shared=False)

mean: : tensor([-0.2283, 0.1333, 0.0510])

std: : tensor([1.1642, 1.1120, 1.0838])

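The count is slightly higher than the number of steps (since we did not use any decay). The difference between the two is due to the fact that ParallelEnv creates a dummy environment to initialize the shared TensorDict that is used to collect data from the dispatched environments. This small difference will usually be absorbed throughout training.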

print(

"update counts: ",

make_env.state_dict()["_extra_state"]["observation_count"],

)

env.close()

del env

update counts: tensor([18.])

Total running time of the script: (3 minutes 45.424 seconds)

Estimated memory usage: 317 MB